OIML BULLETIN - VOLUME LXVI - NUMBER 3 - July 2025

evolution

Smart development for smart standards

Spencer Breiner, Jacob Collard, Frederic de Vaulx, Eric Simmon

NIST, Gaithersburg, United States

Citation: S. Breiner et al. 2025 OIML Bulletin LXVI(3) 20250304

Abstract

This article looks at "smart standards": documents that integrate natural language with formal specifications such as data structures and algorithms. As standards documents begin to incorporate computational content, we anticipate both technological and sociological challenges. We envision that existing techniques and best practices from software development can help to address both these areas, leveraging computational tools like compilers and error checking to support the standards development process.

Introduction

Establishing confidence in measurements is crucial for fair trade, economic integrity, public health, and safety. Legal metrology develops and enforces measurement-related regulations, providing the foundation for this trust throughout the lifecycle of measuring instruments. The International Organization of Legal Metrology (OIML) plays a vital role in this endeavor by promoting a 'quality infrastructure' that integrates metrology, standards, testing, quality management, and global infrastructure harmonization. Recognizing the ongoing evolution in this field, the OIML identifies digitalization as “an essential part of the wider digital transformation in the quality infrastructure,” [1] aiming to leverage digital technologies to foster innovation and adapt to new products, services, and legislation.

However, this shift towards digitalization is not without difficulties. The legal metrology landscape has significantly changed, moving from self-contained physical instruments to cyber-physical measurement systems that interface directly with supply chains and markets. While these advances offer new capabilities, they also introduce challenges such as increased system complexity and heterogeneity. This calls for legal metrology standards that are more adaptive, able to fit their context and respond to changes in the (physical, computational or regulatory) environment.

Taking these issues seriously will require a substantial transformation of scientific and industrial metrological activities, underpinned by two key elements: (1) digital data that is FAIR (Findable, Accessible, Interoperable, Reusable), traceable, and SI-compatible, and (2) standardization that fosters consistency and interoperability. To meet these challenges, we envision a standards development process that integrates natural language with computational data structures to support an innovative and adaptive quality infrastructure. Current conceptual definitions may be incomplete or overcomplete, either omitting important background assumptions or including typical features which are common but inessential; sometimes, both at once. The sheer quantity and complexity of information, which is often spread over multiple documents managed by different committees, can be overwhelming. Modern measurement systems generate extensive data, but the precise form of these data structures is rarely specified, leading to duplicate work and incompatible results among different standards clients. Automation and a direct connection from measurements to markets heighten the risks associated with misapplication and shorten the time-frame available to address such issues.

To address these challenges, we propose a reconceptualization of both the form that standards take and the processes we use to produce them. In particular, standards involving computational entities like data structures and digital sensors must evolve into computational documents themselves. Such documents would embed formal representations, such as schemas and algorithms, alongside the natural language currently employed. A strong integration between formal and natural language is crucial for ensuring that standards can adequately govern and interact with systems that include both human and digital agents. To maintain alignment between the computational and linguistic components of these future standards—and the real-world processes they oversee—a more meticulous approach to language use will be required, especially across multiple documents or contexts. Combining techniques from software development and natural-language processing can help.

For the purposes of this article, we will use the term smart standard to refer to a standards document in which the content is provided in a formal or logical specification alongside or in place of natural language.[2] Intuitively, smart standards incorporate both natural language and computational specifications. This distinguishes them from existing machine-readable standards, which typically only encode the document's structure (e.g., sections, references) rather than the substantive, domain-specific knowledge contained within the text itself. The development of such smart standards represents a critical step towards achieving the FAIR data principles and managing the complexity introduced by modern, interconnected measurement systems.

As standards increasingly come to resemble software products, we expect that social mechanisms and support technologies from software development will work their way into standards development as well. Examples include better indexing of dependencies between standard terminology, more granular change management, and compositional validation of interacting concepts. At the same time, the necessary rigor of computational specifications will push back against some pathologies of contemporary standards development, notably a (misguided) drive for premature standards development. Though these changes may be driven by the introduction of smart standards, the changes themselves are primarily social and methodological, and progress in these areas need not wait for computational documents. However, smart standards will provide additional affordances needed to support technological solutions to these issues. In aggregate, we will refer to these process modifications as smart development.

Smart Standards

Prose is the core communication mechanism in standards today, although other elements like tables, diagrams and even computational schemas also play a role. Language is a powerful and flexible way to bridge the abstract and the concrete and relate a wide variety of concepts to real or possible situations. All this will continue to hold in an era of smart standards; computation will supplement natural language, not replace it.

Despite its utility and ubiquity, natural language is the source of many challenges for standards development and communication more generally. Language is ambiguous, vague, and subjective. Minor variations in language use (such as an individual’s understanding of a word’s meaning) can have significant impacts, and differences in grammar due to regional or individual variation can cause confusion or misunderstanding. Translating one language into another precisely is extremely difficult, if not impossible in certain situations, not to mention costly. In the rapidly changing world of information technology, usage can change extremely quickly. The term “artificial intelligence” refers to a very different set of entities today than it did even five years ago.

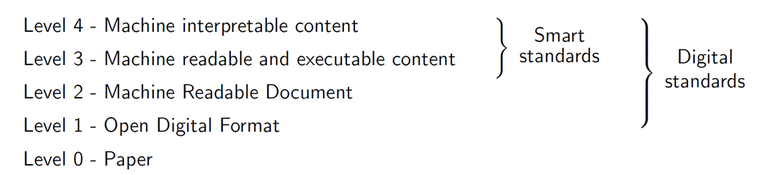

Table 1. IEC levels of digital standards (IEC, 2023).

To begin thinking about the affordances of smart standards, we propose an analogy between software development and standards development. Both are concerned with the production of abstract specifications which govern activities in the real world, and both are (often) large-scale endeavors incorporating inputs from collaborators around the globe. Standards committees today work with PDFs and paper, but, in the future, we expect that standards documents will incorporate additional elements that look more like code. This provides a tremendous opportunity to borrow and adapt tools and best practices from software development to better support this work.

To adapt this to the context of standards, we find it useful to consider a spectrum of formality with natural language at one end and formal syntax on the other [3]. In between, we find semi-formal specifications like controlled natural language (e.g., the technical use of “may” vs. “shall” in standards documents) and pseudo-code (high-level, language-agnostic algorithmic descriptions). As we move along the spectrum, we trade (more ambiguous) simplicity against (more onerous) precision.
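Controlled natural language sits close enough to the formal end of the spectrum that simple tools can already exploit it. The following is a minimal sketch, assuming a simplified list of modal verbs; the category labels and the function name `classify_provision` are illustrative choices, not taken from any drafting directive.

```python
import re

# Illustrative mapping from modal verbs to the kind of provision they signal.
# Order matters: negated forms are listed before the bare verb.
MODAL_PATTERNS = [
    (r"\bshall not\b", "prohibition"),
    (r"\bshall\b", "requirement"),
    (r"\bshould not\b", "discouragement"),
    (r"\bshould\b", "recommendation"),
    (r"\bmay\b", "permission"),
]


def classify_provision(sentence: str) -> str:
    """Return the label of the first pattern in MODAL_PATTERNS that matches."""
    lowered = sentence.lower()
    for pattern, label in MODAL_PATTERNS:
        if re.search(pattern, lowered):
            return label
    # No modal verb found: treat the sentence as non-normative text.
    return "informative"
```

A check like this cannot tell whether a requirement is well posed, but it can flag sentences whose normative status is unclear before they reach a committee.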

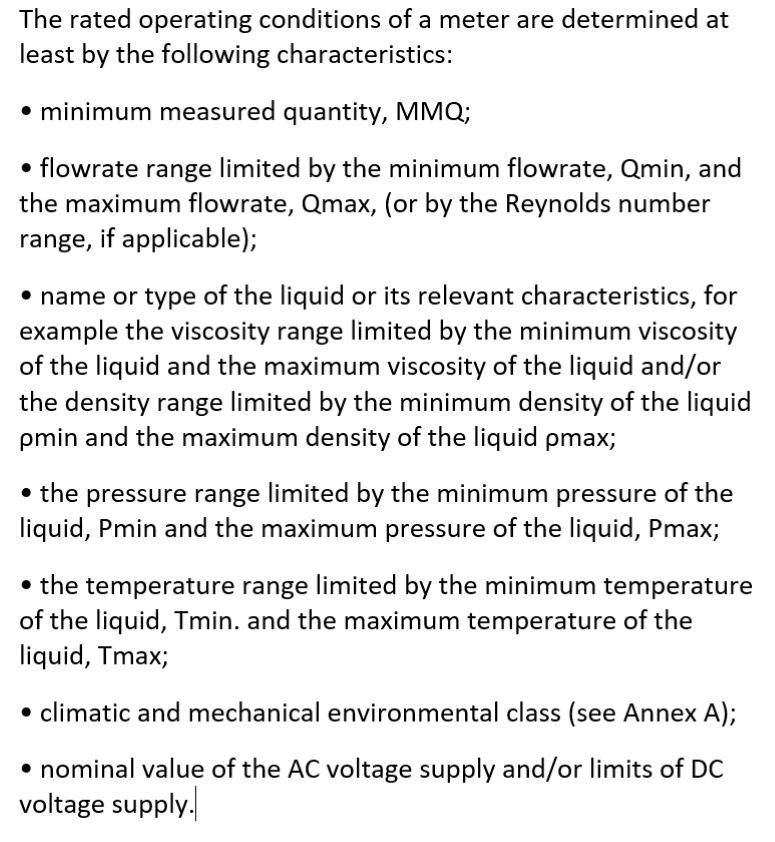

Similarly, the level of precision within a standard is not uniform; some requirements must be highly detailed while others can be given at a high level. Compare, for example, two requirements from the OIML standard on “Dynamic measuring systems for liquids other than water” (OIML R 117-1:2007 [4]). Requirement 2.16.3 states “It shall not be possible to bypass the meter in normal conditions of use (See note in Annex B)”. This is a high-level assertion and, as is often the case, it is clarified by non-normative content in an annex, such as examples or possible (but not required) solutions.

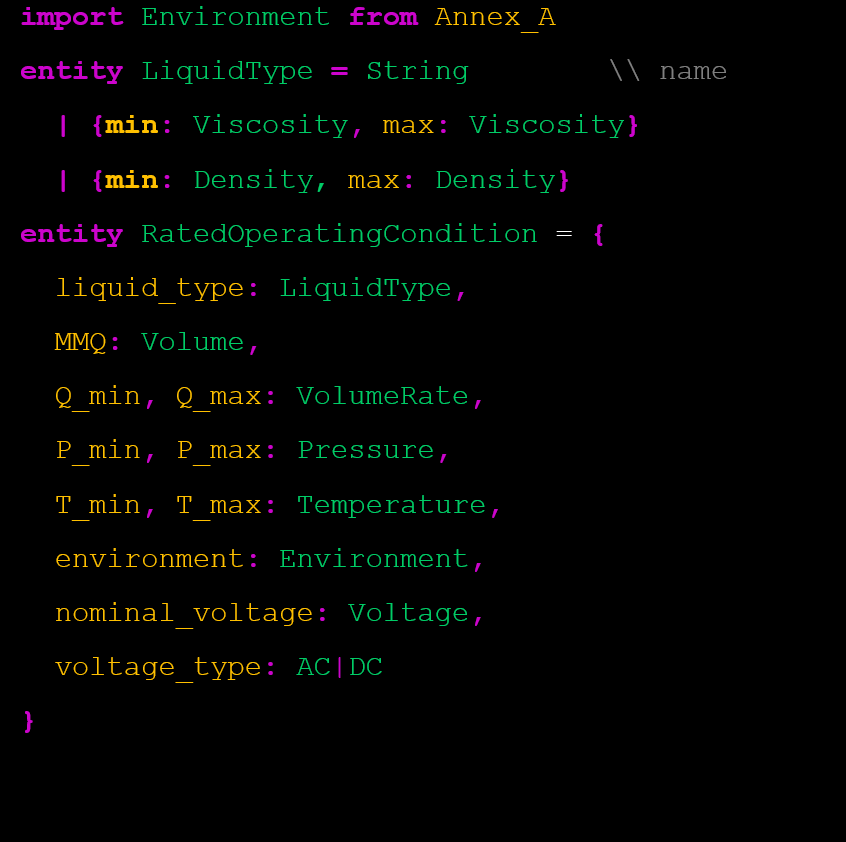

Compare this to Requirement 3.1.1.1, which specifies the rated operating conditions of a flow meter by spelling out a list of required parameters (temperature, pressure, viscosity and so on). This is much easier to translate into a formal language. In computer science, a schema is a predefined format or template that specifies how data should be arranged, labeled, and related to other data, enforcing consistency and clarity. This reduces the complexity of interaction between independent parties by standardizing the interface through which they communicate. Here we use the term quite broadly; depending on context, a schema might be written for a database, a web interface, a scientific ontology, or a machine-learning pipeline.

Table 2 shows a translation of Requirement 3.1.1.1 into a formal schema. Entities are shown in green, while relationships are yellow. The latter can be thought of as “has a” relationships, so that the first line within the specification of RatedOperatingCondition can be read as “a rated operating condition has a minimum measured quantity (which is expressed as a volume).”

Table 2. Representation of "rated operating conditions" [4] in a formal schema.

Any schema is written in a schema language, and these languages have different features. For example, database schemas are “flat”, in the sense that one table cannot contain another (though it can include a pointer to another table); other types of schemas allow one entity to nest inside another. Table 2 shows the encoding of the OIML definition of “rated operating condition” into a hypothetical schema language, which includes complex entities LiquidType and Environment nested within RatedOperatingCondition. The features of our hypothetical language are shown in magenta, including new entity declarations (entity … = …), import statements (import … from …) and constructors for alternatives (…|…) and aggregates ({…,…}).
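For readers more familiar with mainstream programming languages, the same constructors can be approximated with Python dataclasses. This is a rough sketch under stated assumptions: the field names, the units, and the choice of which entity uses an alternative are ours, loosely based on the parameters mentioned above, and are not a transcription of Table 2 or of R 117-1.

```python
from dataclasses import dataclass
from typing import Union


@dataclass(frozen=True)
class Range:
    """A closed interval of a quantity, with an explicit unit string."""
    minimum: float
    maximum: float
    unit: str

    def __post_init__(self) -> None:
        if self.minimum > self.maximum:
            raise ValueError(f"empty range: {self.minimum} > {self.maximum}")

    def contains(self, value: float) -> bool:
        return self.minimum <= value <= self.maximum


@dataclass(frozen=True)
class NamedLiquid:
    name: str


@dataclass(frozen=True)
class CharacterizedLiquid:
    viscosity: Range


# Alternative (the "...|..." constructor): assumed here to apply to LiquidType.
LiquidType = Union[NamedLiquid, CharacterizedLiquid]


@dataclass(frozen=True)
class Environment:
    ambient_temperature: Range


# Aggregate (the "{...,...}" constructor): each field is a "has a" relationship.
@dataclass(frozen=True)
class RatedOperatingCondition:
    minimum_measured_quantity_l: float  # a volume; litres is an assumed unit
    liquid: LiquidType
    liquid_temperature: Range
    liquid_pressure: Range
    environment: Environment


# Hypothetical values, chosen only to show how the nested entities compose.
example = RatedOperatingCondition(
    minimum_measured_quantity_l=5.0,
    liquid=NamedLiquid("diesel"),
    liquid_temperature=Range(-10.0, 50.0, "degC"),
    liquid_pressure=Range(100.0, 400.0, "kPa"),
    environment=Environment(Range(-25.0, 55.0, "degC")),
)
```

Even in this small form, the structure rejects some malformed inputs (an inverted range raises an error at construction time), which is the kind of immediate feedback a prose definition cannot give.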

One might wonder, “If the translation to a schema is easy, why should we put it in the standard?” Although the translation process is easy, it is also indeterminate; if we ask three different engineers to formalize a schema from the natural language definition, we are likely to end up with three different names for each concept (e.g., T_max, Tmax, and max_temp). If a standard prescribes a formal schema for a concept, including these sorts of encodings, then all parties can easily exchange information and (optionally) share the cost of infrastructure like documentation and validation. Otherwise, different stakeholders are likely to construct independent implementations, leading to interoperability challenges and duplicate work.
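The cost of indeterminate naming can be made concrete with a small sketch. Here the three spellings from the example above are attributed to three hypothetical producers (`vendor_a`, `vendor_b`, `vendor_c`), and `max_temperature` is an assumed shared name; without a schema in the standard, every consumer has to maintain a table like this for every producer.

```python
# Hypothetical alias tables: one per producer, mapping local names to a shared name.
ALIASES = {
    "vendor_a": {"T_max": "max_temperature"},
    "vendor_b": {"Tmax": "max_temperature"},
    "vendor_c": {"max_temp": "max_temperature"},
}


def normalize(producer: str, record: dict) -> dict:
    """Rename a producer's fields to the shared names; fail loudly on unknown fields."""
    aliases = ALIASES[producer]
    unknown = set(record) - set(aliases)
    if unknown:
        raise KeyError(f"{producer}: no mapping for {sorted(unknown)}")
    return {aliases[key]: value for key, value in record.items()}
```

With a shared schema the alias tables disappear, because every producer emits the shared name directly.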

The ability to view content formality on a spectrum allows us to consider measuring the formality of content. Such metrics could be used in a few different ways. One option is risk management for ambiguity, combining measured levels of formality with the consequences of misinterpretation to help select targets for formalization. We can also imagine contextual enhancement, where lower levels of formality trigger the inclusion of additional clarifying content, helping standards users to better understand the intention behind an informal definition, reducing the range and impact of possible misinterpretations.

Smart Development

Robust standards are science-based and industry-driven. When done well, standards create a common language, provide for open markets and business opportunities, and protect consumers and the environment. However, when the standards development process is poorly executed, standards can create barriers to trade and close markets, give unfair advantages to countries or companies, stifle innovation, impede interoperability, and entrench inferior technologies. Instead of enabling new markets and new technologies, poorly defined standards represent an economic risk.

Standards development today is a challenging process, incorporating and synthesizing the detailed domain knowledge of diverse stakeholders with different objectives and criteria for success. Reaching a common understanding and crystallizing it in prose is no easy task. Standards clients are more demanding than in the past, expecting standardization for emerging technologies where definitions and best practices are not yet settled. Correspondingly, development periods for standards are shrinking.

The shift to smart standards will only exacerbate this trend. Today, small inconsistencies or conflicts that might occur, either within or between standards, are resolved through the good judgement of professional engineers. Once standards documents are incorporated directly into a digital infrastructure, we will no longer be able to rely on the filter of common sense. Moreover, the cost of errors and bugs will go up, while the timeframe to fix them goes down.

As we begin the process of introducing “smart” elements into standards, it will be important to take an incremental approach. Within a computational system, each element provides both affordances and obligations; we introduce a new data type or algorithm because of the new capabilities it allows, but we also incur the cost of updating and maintaining those elements as other parts of the system change. This is called “technical debt”. Moreover, experience has shown that it is much easier to introduce a new element into a computational system (extension) than to remove an old one (deprecation). Put together, these two observations argue for being extremely careful and methodical when introducing new computational elements to a system.

So long as humans mediate the application of standards, conflicts are easy to paper over, but for computations this sort of mis-aligned abstraction leads to codebases that are larger, more brittle and less interoperable. This suggests a core-periphery model for smart-standards development; to borrow a phrase, “If you can't say something right, then don't say anything at all.”

In this approach, we would only introduce formal specification for core elements of the natural language specification that are clear and stable. Concepts which lack broad agreement among domain practitioners should be left out. Rather than codifying these “slippery” concepts right away, smart standards should instead provide a peripheral space to act as a pre-standardization clearing-house for alternative proposals, reference implementations, and best practices. Sometimes, broad adoption will push elements of the periphery into the core; in other cases, the persistence of competing alternatives will reveal distinctions in context or goals that were unclear at the outset. Either way, we should approach smart standards as living documents, with the expectation that they will grow and change.

Another area for improvement is requirement-driven development. In many areas of engineering, not just software, the collection of stakeholder requirements is a first step towards developing a robust solution. These are used to guide the entire development process, helping to select between alternative designs and to assess the final product. For standards, requirements collection and validation are usually much more informal. A standards committee is usually given the scope and justification for a standard, but this may also be supplemented with partial solutions such as outlines or initial drafts. This runs the risk of path-dependence, where the goals and motivations of the first party to contribute are most likely to be reflected in the final document. A more uniform and independent process for collecting this sort of pre-standardization data from all participants, as an input for the committee, would help to balance competing goals and minimize groupthink on proposed solutions.

Tracing Definitions

In the interest of concreteness, we specialize our discussion in this section to one part of standards development – definitions – with a particular focus on short-term interventions that need not wait for the development of full-fledged smart standards. Definitions play a crucial role in standards, delineating the proper use of certain terminology within and between documents. Indeed, many standards organizations already have guidelines for formatting terminology in standards, typically organized around the idea of an intensional definition, which “conveys the intension of a concept by stating the immediate superordinate and the delimiting characteristic(s)” (ISO 704, 2022 [5]). Intensional definitions are preferred because they clearly reveal the differences between concepts in a concept system.

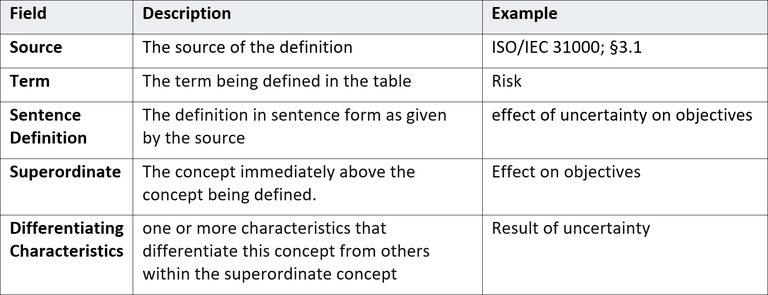

However, the structure of language and grammar in sentence form can sometimes hinder clear intensional definitions. A schematic perspective can help, even in the absence of a formal language. A semi-structured schema is an organizational structure for linguistic data; the structure is formal, but the content is not. These provide some of the conceptual benefits of formal schemas without requiring substantial training for developers and subject-matter experts. They decrease cognitive load by encouraging developers to focus on essential elements rather than large spans of prose. By using semi-structured schemas, standards developers can create more precise and clear definitions, which can improve the overall quality of standards, and prepare the ground to incorporate these definitions into smart standards in the future.

An example is the intensional definition table shown in Table 3, which decomposes proposed definitions into their essential components, including the source of the definition, the term being defined, the definition in sentence form, the superordinate concept, and its differentiating characteristics. This is illustrated for the definition of “risk” provided by ISO 31000, a guide to risk management. The definition is written as follows: “effect of uncertainty on objectives” [6]. Leitch [7] critiques this definition, largely on the basis that “Risk is not an effect on objectives.” Whether we agree with the critique or not, the intensional definition table reveals quite clearly that this is what the definition asserts. The table can also be extended to include extraneous items such as redundant or optional characteristics, which are often present in existing definitions.
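The components listed above map directly onto a record type. The sketch below is one possible encoding, not the layout of Table 3: the class name, the method `asserted_kind`, and the split of the ISO 31000 wording into two characteristics are our own reading.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class IntensionalDefinition:
    """Semi-structured record: the slots are formal, the contents are free text."""
    source: str
    term: str
    sentence: str
    superordinate: str
    characteristics: Tuple[str, ...]
    extraneous: Tuple[str, ...] = ()  # redundant or optional characteristics

    def asserted_kind(self) -> str:
        """Spell out the 'is a kind of' claim implied by the superordinate."""
        return f"{self.term} is a kind of {self.superordinate}"


risk = IntensionalDefinition(
    source="ISO 31000",
    term="risk",
    sentence="effect of uncertainty on objectives",
    superordinate="effect",
    characteristics=("of uncertainty", "on objectives"),
)
```

Calling `risk.asserted_kind()` surfaces the claim that Leitch objects to, namely that risk is a kind of effect, without requiring the reader to parse the sentence form.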

Another readily available approach relies on natural language analytics, tools that can automatically analyze free-form language use to produce useful representations, statistics, information retrieval, or recommendations. These analytics tools can run in the background during standards development, requiring no additional effort on the part of standards developers. This is already quite useful for indexing and searching within the corpus of standards; in our experience, tracking definitions across documents is a significant friction in development meetings today. More substantive analyses can help to identify and correct conflicts as they arise.

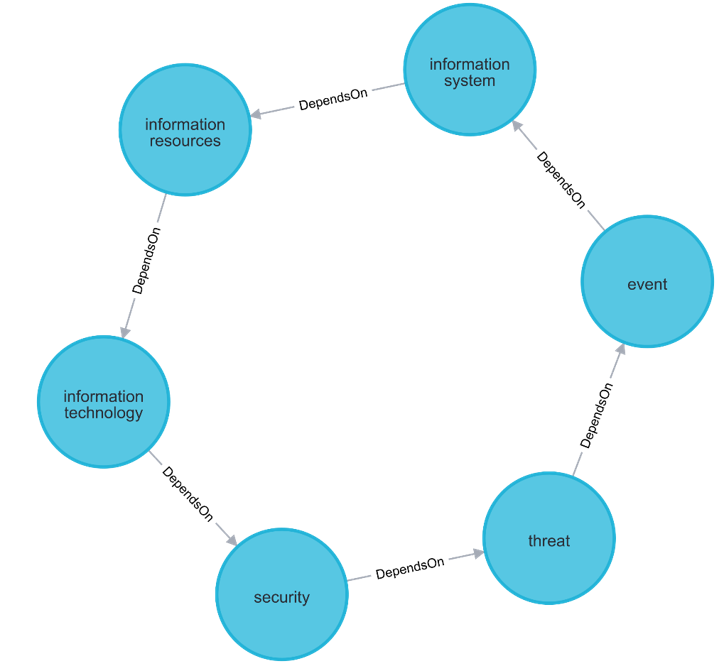

A good example here involves definitional dependencies, which occur when the definition of one term involves the use of one or more defined terms, drawn from either the same document or another. The logical validity of this approach relies on an absence of circular definitions, e.g., A refers to B refers to C refers to A.... Though humans are remarkably good at maintaining semantic consistency within their own domains, when large systems of definitions are written by multiple experts from different domains, tracking global features like cycles becomes a real issue. Our problem here is that the standards documents are optimized for humans (i.e., written in natural language) and humans are bad at tracking large dependency graphs. By extracting and exposing that graphical structure alongside the natural language, we can identify cycles computationally (Figure 1), providing immediate validation and feedback to the standards developer.

Table 3. A semi-structured schema for intensional definitions.

Figure 1. A six-step definitional cycle extracted from the NIST Risk Management Framework standard [8].
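Once definitional dependencies have been extracted into a graph, finding a cycle is a standard depth-first search. The following sketch assumes the dependencies are already available as a mapping from each term to the defined terms its definition uses; the extraction step itself, and the function name `find_cycle`, are outside what the text specifies.

```python
from typing import Dict, List, Optional


def find_cycle(dependencies: Dict[str, List[str]]) -> Optional[List[str]]:
    """Return one cycle as [a, b, ..., a], or None if the graph is acyclic."""
    WHITE, GREY, BLACK = 0, 1, 2  # unvisited, on the current path, finished
    state: Dict[str, int] = {}
    path: List[str] = []

    def visit(term: str) -> Optional[List[str]]:
        state[term] = GREY
        path.append(term)
        for used in dependencies.get(term, []):
            status = state.get(used, WHITE)
            if status == GREY:
                # 'used' is already on the current path, so the loop is closed.
                return path[path.index(used):] + [used]
            if status == WHITE:
                cycle = visit(used)
                if cycle is not None:
                    return cycle
        path.pop()
        state[term] = BLACK
        return None

    for term in dependencies:
        if state.get(term, WHITE) == WHITE:
            cycle = visit(term)
            if cycle is not None:
                return cycle
    return None
```

For the abstract example given above, `find_cycle({"A": ["B"], "B": ["C"], "C": ["A"]})` returns `["A", "B", "C", "A"]`. The search visits each term and each dependency once, so it is cheap enough to run on every edit of a draft.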

These issues become especially acute when we consider dependencies among many documents. For a real-world example, consider the joint ISO/IEC/IEEE standards on “Software, systems and enterprise”. Among these documents are two, 42010 on “Architecture description” and 42020 on “Architecture processes”, which contain two closely related concepts. The 2011 version of 42010 [9] included a definition for “entity of interest”: “[the] subject of an architecture description”. A few years later, the authors of 42020:2019 [10] found a need for an analogous concept, but the 42010 definition depends on another concept, “architecture description”, which is internal to 42010 and does not make sense in the context of 42020. Instead, they wrote a new definition for “architecture entity”: “thing being considered, described, discussed, studied or otherwise addressed during the architecting effort.”

The second definition is an improvement, no doubt, but at the cost of maintaining two terms and definitions for more-or-less the same concept. This might seem like a small issue, but today, working drafts for additional documents (42024, 42042) adopt the terminology of 42010 while using the defining phrase from 42020. This is a recipe for confusion and inconsistency, and unfortunately an opportunity for harmonization was missed when 42010 was revised in 2022 [11].

The Benefits of Formality

Working formal specifications into standards documents is no easy task. One of the most significant benefits of natural language is that it requires no specialized knowledge on its own. Almost every human being knows at least one language, and experts in any given domain are already aware (at least implicitly) of specialized language in their domain. Programming languages, on the other hand, require a great deal of time and effort to learn, and it would be an unreasonable burden to require standards developers to learn principles of programming and data management in addition to subject matter expertise, consensus building processes, organizational procedures, etc. Lowering this barrier will likely require specialized tooling.

For programmers, an integrated development environment (IDE) is an editor optimized for programming. It manages interactions between the codebase, stored and edited as text, and language infrastructure for executing, testing and debugging programs. Correspondingly, we imagine an “IDE for standards”, a custom environment for authoring standards with built-in management of text-to-computation linkages and verification of semantic requirements.

Such an interface would support many of the ideas we have raised above. Within an IDE, definitional dependencies are analogous to hyperlinks, and we can follow those references with just a click. When programming against a schema, support tools like compilers can instantly analyze a definition, highlight errors like typos and missing values, and often suggest appropriate corrections. It is worth noting that these mechanisms are cheap, fast, local and deterministic, unlike modern language models which, though they have much broader capabilities, also introduce much greater uncertainty into the results.
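To give a feel for how cheap and deterministic such checks are, the sketch below validates a record against a list of expected field names and suggests corrections for likely typos using the `difflib` module from the Python standard library. The field list is hypothetical and is not taken from any published schema.

```python
import difflib
from typing import Dict, List

# Hypothetical field list for illustration only.
EXPECTED_FIELDS = ["min_temperature", "max_temperature", "min_pressure", "max_pressure"]


def check_record(record: Dict[str, float]) -> List[str]:
    """Return human-readable diagnostics for unknown and missing fields."""
    diagnostics = []
    for name in record:
        if name not in EXPECTED_FIELDS:
            # Suggest the closest expected name, if any is similar enough.
            guesses = difflib.get_close_matches(name, EXPECTED_FIELDS, n=1)
            hint = f"; did you mean '{guesses[0]}'?" if guesses else ""
            diagnostics.append(f"unknown field '{name}'{hint}")
    for name in EXPECTED_FIELDS:
        if name not in record:
            diagnostics.append(f"missing field '{name}'")
    return diagnostics
```

Given a record that misspells one field as `max_temperture`, the function reports both the unknown field, with a suggested correction, and the resulting missing value. The same input always yields the same diagnostics, which is the property contrasted with language models above.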

Another area where automated assistance is invaluable is namespace management, which we may think of as the harmonization of independently developed terminology. Arguments about naming can be surprisingly contentious [12], and often get in the way of more important issues like identifying good definitions. Naming collisions are inevitable; common words like “signal” and “object” are bound to be used in different ways for different contexts. Modern programming languages work around this by making imports explicit (cf. first line of Table 2) and, in the event of a naming collision, requiring the author to disambiguate conflicting terms. These sorts of mechanisms for namespace management would allow standards developers to harmonize definitions while “agreeing to disagree” about naming conventions, with the same underlying structure translated into each party’s preferred nomenclature.
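A minimal sketch of this idea follows, assuming that each community keeps its own labels but maps them to shared concept identifiers. The namespaces, labels, and identifiers below are all invented for illustration.

```python
from typing import Dict, List

# Hypothetical namespaces: each maps a local label to a shared concept identifier.
NAMESPACES: Dict[str, Dict[str, str]] = {
    "electrical": {
        "signal": "concept:voltage-waveform",
        "object": "concept:device-under-test",
    },
    "software": {
        "signal": "concept:process-interrupt",
        "entity": "concept:device-under-test",
    },
}


def resolve(label: str, imported: List[str]) -> str:
    """Resolve a label against the imported namespaces, refusing to guess on collisions."""
    if "." in label:  # qualified form, e.g. "software.signal"
        namespace, local = label.split(".", 1)
        return NAMESPACES[namespace][local]
    hits = {ns: NAMESPACES[ns][label] for ns in imported if label in NAMESPACES[ns]}
    if not hits:
        raise KeyError(f"'{label}' is not defined in {imported}")
    if len(set(hits.values())) > 1:
        options = [ns + "." + label for ns in hits]
        raise ValueError(f"'{label}' is ambiguous; qualify it as one of {options}")
    return next(iter(hits.values()))
```

Here “object” and “entity” resolve to the same concept, so the two communities can keep their preferred names, while an unqualified “signal” is rejected until the author states which meaning is intended.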

Whereas namespaces manage the alignment between interacting concepts within a single piece of software (or standard), version control concerns the alignment between interacting software packages. It is a fact of life in both standards and software that one must occasionally update an existing specification. In either case, preparing these updates is a collaborative enterprise, synthesizing the views of many contributors, and pushing these changes out to existing workflows can be quite delicate.

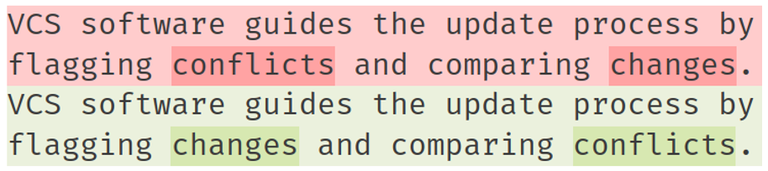

Software developers use version control software (VCS) to support this process of collaborative update. Shared code is stored in a repository, which may be public or private. Typically, all collaborators can read the existing codebase, while only a few administrators are permitted to make changes directly. Other contributors work in parallel to the main codebase by splitting their changes into separate “branches”. When these changes are merged back into the primary codebase, the VCS guides the update process by flagging changes and comparing conflicts (see Figure 2). VCS hosting platforms typically also provide a communication mechanism to track bug reports and other user feedback, with direct indexing to existing code and future updates. It is not hard to see how more timely updates, granular change propagation and better issue tracking could have helped to address the definitional inconsistencies discussed in the previous section.
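The flagging step can be illustrated with the `difflib` module from the Python standard library, which compares two versions of a text line by line. This is only a sketch of the idea; the function name `flag_changes` is ours, and real version control systems add history, branching and merging on top of this kind of comparison.

```python
import difflib
from typing import List


def flag_changes(old_text: str, new_text: str) -> List[str]:
    """Return only the removed ('- ') and added ('+ ') lines between two drafts."""
    delta = difflib.ndiff(old_text.splitlines(), new_text.splitlines())
    # ndiff also emits unchanged lines ('  ') and hint lines ('? '); drop those.
    return [line for line in delta if line.startswith(("- ", "+ "))]


# A hypothetical edit to a definition, used only to exercise the function.
draft_1 = "term: entity of interest\ndefinition: subject of an architecture description"
draft_2 = "term: entity of interest\ndefinition: thing being considered during the architecting effort"
changes = flag_changes(draft_1, draft_2)
```

For the two drafts above, `changes` holds one removed line and one added line, both for the definition, while the unchanged term line is left out.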

In fact, there are several ongoing pilot projects to develop just such an environment.[13] However, it seems that these attempt to “own the interface”, providing a web front-end to a privately managed backend database. A more open approach might take advantage of the “Language Server Protocol”, a mechanism that allows users to import custom behavior into the editor of their choice. This is the default approach to supporting rich interactions for programmers because the effort necessary to develop a language extension is typically much less than that of building an IDE. More generally, while it makes sense for larger SDOs and standards clients to lead the way on smart-standards development, providing a shared platform of digital infrastructure across organizations will reduce duplicate effort and simplify interaction across boundaries.

Figure 2. A modification flagged by version control software.

Conclusion

To maintain relevance in an increasingly digitized world, standards must evolve into digital artifacts. The inclusion of computational content will entail substantial changes, both to the documents themselves and to the processes we use to produce them. Executed well, the transition to smart standards will use the complementary affordances of formal logic and natural language to compensate for each other's deficits. Done poorly, it will instead load additional obligations onto overworked standards committees while providing neither the training nor the motivation to support these changes.

Research questions abound, ranging from technological questions about computational documents to sociological issues of consensus in communities of practice. Many of these issues go beyond standards, per se – computational documents and governance processes are relevant for many domains – and we should expect to mine insights from many different communities of domain specialists, engineers, computer scientists, linguists and others. By now it is also clear that large language models (LLMs) will play a role in future standards development, likely helping to synthesize discussions into drafts and translate natural-language specifications into more formal models. However, using these tools to elicit and encode domain knowledge is currently unreliable, and better mechanisms are needed to compare the resulting formal models with experts' intuitive understanding.

To close, we note that, perhaps counter-intuitively, the introduction of smart standards may be the beginning of the end for the standards document. Today, on paper, each standard has a definite organization into sections, paragraphs, etc. For smart standards each of these items is marked and stored – the content remains the same – but the ordering of these items within and between documents becomes secondary, even superfluous. Rather than distributing standards as static documents that are the same for everyone, we imagine “standard reports” that are specifically adapted to their context. These would aggregate all and only those details relevant at the point of application, possibly drawing from multiple, overlapping standards, and harmonizing these viewpoints to produce a computationally-traceable certificate of conformance or an actionable list of unmet requirements.

Disclaimer

Certain equipment, instruments, software, or materials are identified in this paper in order to specify the experimental procedure adequately. Such identification is not intended to imply recommendation or endorsement of any product or service by NIST, nor is it intended to imply that the materials or equipment identified are necessarily the best available for the purpose. These opinions, recommendations, findings, and conclusions do not necessarily reflect the views or policies of NIST or the United States Government.

Acknowledgements

Our thanks to Katya Delak, Jan Konijnenburg and Ya-Shian Li-Baboud for background and discussions on legal metrology and the on-going digitalization of legal metrology. Thanks also to Jan Konijnenburg, Ya-Shian Li-Baboud and John Messina for review and commentary on an earlier draft.

References and notes

[1] Schwartz, R. 2021, Digital Transformation in (Legal) Metrology – The View of the BIPM-OIML Joint Task Group, OIML Bulletin Volume LXII, Number 3

[2] A recent report of the International Electrotechnical Commission (IEC, 2023: .) describes "SMART standards" as Standards for Machines that are Applicable, Readable and Transferable. This aligns well with our conception of smart standards (Table 1), but we prefer to drop the acronym.

[3] Heylighen, F. (1999). Advantages and limitations of formal expression. Foundations of Science 4, 25–56. doi: 10.1023/A:1009686703349

[4] OIML Recommendation R 117-1:2007, Dynamic measuring systems for liquids other than water

[5] ISO 704:2022, Terminology work – Principles and methods

[6] ISO 31000:2018, Risk management – Guidelines

[7] Leitch, Matthew, 2015, The fundamental flaws in ISO’s definition of ‘risk’. Working In Uncertainty. Published online. Accessed 14-05-2025. (link)

[8] NIST, 2018, NIST Risk Management Framework standard

[9] ISO/IEC/IEEE 42010:2011, Systems and software engineering – Architecture description

[10] ISO/IEC/IEEE 42020:2019, Software, systems and enterprise – Architecture processes

[11] ISO/IEC/IEEE 42010:2022, Software, systems and enterprise – Architecture description

[12] “There are two hard problems in computer science: cache invalidation, naming things and off-by-one errors”, Leon Bambrick

[13] ISO: https://www.iso.org/smart; Cenelec: https://www.cencenelec.eu/news-and-events/news/2025/brief-news/2025-01-16-smart-phase-1-launch/