OIML BULLETIN - VOLUME LXVI - NUMBER 3 - July 2025

evolution

The European Artificial Intelligence Act and Legal Metrology

Citation: F. Thiel 2025 OIML Bulletin LXVI(3) 20250306

Abstract

The European Artificial Intelligence Act (AI Act) has entered into force with the primary goal of promoting the development and adoption of safe and trustworthy AI systems within the EU's single market. It sets up a new governance framework that enables coordination and support for the implementation of this regulation at the national level, while also building capabilities at the Union level, such as the AI Office and the AI Board. In this framework, the AI Act obliges the Member States to designate supervisory authorities in charge of implementing the legislative, product-related requirements. Their supervisory function could build on existing arrangements established by the New Legislative Framework, for example regarding conformity assessment bodies and market surveillance institutions. Legal metrology is the application of legal requirements to measurements and measuring instruments, a practice that has been successfully followed for decades within the New Legislative Framework. The AI Act pertains explicitly to legal metrology. It refers to the use of AI models in recognized areas of legal metrology such as critical infrastructures and law enforcement. Consequently, it seems appropriate to provide insight into the AI Act and its terminology, and to explore the impact of this new European regulation on legal metrology and how the AI-based software systems defined by the AI Act could be addressed with the existing requirements for software in measuring instruments.

1. Introduction

Measuring instrument sensors are developed to meet the legally required measurement accuracy. However, with the increasing sophistication of software environments, modern measuring instruments now use simple hardware sensors together with complex software systems. As a result, innovations, new functionalities, and business and service models are implemented in software, allowing individual customer requirements to be addressed based, for example, on user and system activity data collected during the whole life cycle of the instrument [1, 2, 3]. Such datasets form a foundation for the application of artificial intelligence systems (AI systems) to generate predictions, which are then utilized to create and enhance recommendations, decisions, and actions.

AI not only holds the potential to address complex challenges but also poses risks to privacy, safety, security, and human autonomy. Consequently, there is broad global agreement that effective governance is essential to ensure that AI development and deployment are safe, secure, and trustworthy, with policies and regulations that foster innovation and competition [4]. To this end, the European Parliament and the Council of the EU approved the Artificial Intelligence Act (AI Act), which entered into force on 1 August 2024 [5, 6] as one of the world's first legally binding pieces of legislation on AI. The various provisions of the European AI Act will become applicable over a period of 24-36 months.

Further international AI-related laws have followed since then. The California ‘Safe and Secure Innovation for Frontier Artificial Intelligence Models Act’ (SB-1047) [7] was published later in August 2024. This Act focuses more on regulating technology itself rather than its applications. On 10 December 2024, the Brazilian Senate approved Bill No. 2338/2023, which sets out rules for developing and using artificial intelligence in Brazil [8]. On 26 December 2024, the South Korean National Assembly approved the ‘Basic Act on the Development of Artificial Intelligence and the Establishment of Trust’, which will take effect in January 2026 [8]. The South Korean AI Act (SKAIA) addresses the technical limitations and misuse of AI by defining high-impact and generative AI as regulatory subjects, mandating transparency and safety obligations.

The New Legislative Framework (NLF) [9] and legal metrology are explicitly addressed in Recital 74, and metrology in Article 15(2) of the AI Act. Following the concept of the NLF, the central purpose of the AI Act is to improve the functioning of the internal market by laying down a uniform legal framework, in particular for the development, the placing on the market, the putting into service, and the use of artificial intelligence systems in the Union. To achieve this, it is essential to implement procedures and to engage structures, such as competent Notified Bodies, to conduct conformity assessments before products enter the market. Additionally, market surveillance authorities and verification bodies should oversee the proper use, updating, and repair of these products once they are available to consumers. Legal metrology, among other Union legislation encompassed by the NLF, has successfully established and used these processes and structures for decades. Legal metrology is the inclusion of metrology – the “Science of Measurement” – in the legislation on the use of measurement results and measuring instruments. It establishes confidence in the correctness of measurements and the protection of users of measuring instruments and their customers.

The measuring instruments subject to legal metrology are governed by the Measuring Instruments Directive (MID) (2014/32/EU) and the Non-Automatic Weighing Instruments Directive (NAWID) (2014/31/EU). Together, the two directives cover a total of 14 categories of measuring instruments. It is estimated that MID and NAWID apply to 345 million measuring instruments sold annually in the European market [10]. Given the wide range of measuring instruments, it is not surprising that an intersection between legal metrology and the AI Act is anticipated. One potential area of overlap is the use of utility meters – such as electricity, gas, water, and heat meters – in critical infrastructures, as outlined in Recital 55 and listed in Annex III of the AI Act.

At this time, the implementation of AI in legal metrology remains minimal, with applications being infrequent. It is assumed that embracing AI technology could significantly enhance accuracy and efficiency in metrological practices, e.g. by paving the way for more reliable measurements in various industries and by streamlining processes in the legal framework. Esche et al. [11] have presented and investigated several classes of use cases for the application of AI in measuring instruments and for conformity assessment. Special practical aspects of applying artificial intelligence in metrology were discussed in [12]. The authors state that neural networks can help to solve a measurement problem where the measurement function is unknown, not fully defined, or too complex for algorithmic formalization, provided that sufficient experimental data suitable for training the neural network are available. The authors of [13] have reviewed their research in the area of trustworthy machine learning, also from a metrology perspective, with an emphasis on uncertainty quantification. Explicit applications of machine learning in legal metrology were discussed in [14], which describes a new approach to instrument evaluation using machine learning algorithms that are capable of preemptively detecting failures.

Promising examples of AI module applications in legal metrology that support processes within the life cycle of measuring instruments could be the assistance of Notified Bodies in conformity assessment, specifically by examining operating system configurations [11] or autonomously checking manufacturers' documentation against standards and regulations [15]. Additionally, AI could be used to implement surveillance measures to detect anomalies during system use [16]. Specifically, there is a trend towards using AI-based processes to autonomously detect anomalies in measurement data, identify deviations from expected values, and inform the verification authorities accordingly, who make the final decision. In Germany, a first step has been taken: information about a potential measurement failure is logged and judged by a human afterwards (Smart Meter Gateway (SMGW), TR-03109-1 Anlage IV: Betriebsprozesse, Section 3.7 Anomalieerkennung [17]), which represents Level 1 Autonomy.

However, the relevance of all these capabilities must be evaluated to determine if they necessitate conformity assessment and market surveillance activities under the MID and/or the AI Act.

If the European AI Act applies to these products, it will significantly affect costs and administrative burdens for European industry and will challenge Notified Bodies as well as market surveillance and verification institutions with regard to their competencies and the need to develop Europe-wide harmonised testing requirements. The latter is particularly crucial for further dismantling existing non-tariff trade barriers, especially regarding technical regulations.

In this context, it is considered important to provide the reader with an overview of the key components of the AI Act and an analysis of the conditions under which MID/NAWID instruments fall under the AI Act and of the obligations these new software systems must meet. In this investigation, we evaluate how well the existing essential requirements and international guidelines for software match the central terms of the AI definitions. Clarifying the terminology of legal metrology and the AI Act and providing a fundamental overview of how AI systems are organized and operate should enhance understanding in this context.

To this end, this article is structured as follows. To establish a common foundation of terms and technology, Section 2 provides a concise functional overview of AI systems based on international standards. Section 3 introduces the AI systems regulated by the EU AI Act, and Section 4 provides insight into the classification of these AI systems. On that basis, Section 5 discusses the potential implications of the AI Act on selected processes and measuring instruments subject to legal metrology. Finally, Section 6 provides a conclusion.

2. Artificial Intelligence Systems: A Brief Overview

The terminology, processes, and software techniques associated with the implementation of AI modules in measuring instruments are unfamiliar to the legal metrology community. Therefore, providing a brief overview of these areas is important for a better understanding, and will support the comprehension of the AI Act's definition.

The term Artificial Intelligence encompasses various methods aimed at optimizing IT systems to solve highly specialized problems. Most AI algorithms are based on neural networks, which harness the vast amount of data available today. These neural networks are loosely inspired by the workings of biological brains: they can process information autonomously, respond to it and learn to solve problems on their own. However, unlike the human brain, they are sensitive to even minor disturbances in the input data. Consequently, machine learning might not be able to solve a problem in a sufficiently stable manner. As AI modules are increasingly employed in highly sensitive industries and business domains, ensuring their accuracy, robustness and quality throughout their entire life cycle is crucial. A comprehensive definition of such systems is therefore pivotal.

The ISO international standard [20] and the OECD framework for the classification of AI systems [21] define an AI system as an engineered system that generates outputs such as content, forecasts, recommendations or decisions for a given set of human-defined objectives. AI systems do not understand; they need human design choices, engineering and oversight. Such oversight is useful to ensure that the AI system is developed and used as intended and that impacts on stakeholders are appropriately considered throughout the system life cycle. Therefore, the level of oversight varies based on the risks associated with the specific use case.

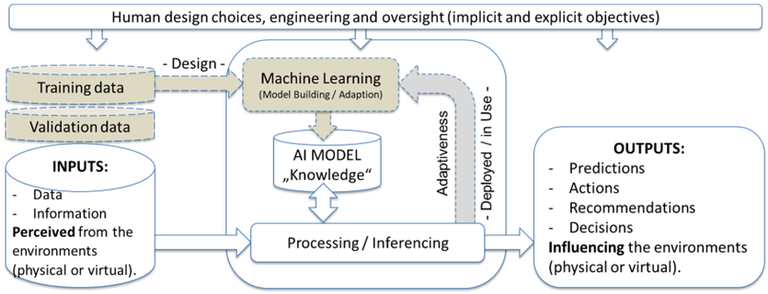

The functional view of an AI system is depicted in Figure 1 as a synthesis from [20] and [21].

Figure 1. Functional view of a machine learning-based AI system. Synthesis from [20] and [21].

Generally, AI systems contain a model which they use to produce predictions, and these predictions are in turn used to successively make recommendations, decisions and actions, in whole or in part by the system itself or by human beings. The model used by the AI system is a machine-readable representation of knowledge. Knowledge has various possible representations, from implicit to explicit ones. Knowledge can also come from various sources, depending on the algorithms used, e.g. when and how data is acquired. The model can either be built directly or be learned from training data (machine learning (ML)-based AI systems). Classical expert systems or reasoning systems equipped with a fixed knowledge base are examples of directly built AI systems. In these cases, the system developers take advantage of human knowledge to provide reasonable rules for the AI system’s behaviour.

ML-based AI systems involve learning. Initial learning, i.e. training, of the model entails computational analyses of a training dataset to detect patterns, build a model, and compare the output of the resulting model to expected behaviours, i.e. to validation data. This describes “supervised learning”, in which the AI is given the solution to the problem (e.g. labelled data). There is also “unsupervised learning”, in which the AI is not told the solution because it may not be known, and “reinforcement learning”, where the AI is given direct feedback on the current result during learning. The resulting knowledge base is a trained model with finely tuned parameters that minimize prediction errors. It is based on a mathematical function and a training set that best represents the behaviour in a given environment. This initial training of an AI system typically occurs during the design phase of the product life cycle. The components represented by dashed lines in Figure 1 are intended for ML-based AI systems.

Inferencing is the process that follows the training process. The ISO standard [20] defines inferencing as reasoning by which conclusions are derived from known facts, rules, a model, a feature or raw data. So, inference is the process of running new, unseen data through a trained AI model to make a prediction or complete a task. Data as input to the AI system might be perceived from the physical or virtual environment. Inferencing encompasses preprocessing the data (e.g. scaling, normalization, extraction of relevant features), feeding the data into the trained model, generating predictions from these input data and, finally, using these predictions for real-world decisions or actions.
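The sequence of training and inferencing described above can be illustrated with a minimal sketch. It assumes a deliberately simple model (a straight line fitted by least squares to labelled data, i.e. supervised learning); the function names, the data and the alert limit are hypothetical and serve only to make the steps visible: preprocessing, feeding the data into the trained model, prediction, and decision.

```python
from statistics import mean


def fit_line(xs, ys):
    """Training: least-squares fit of y = a*x + b on labelled data."""
    mx, my = mean(xs), mean(ys)
    a = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sum(
        (x - mx) ** 2 for x in xs
    )
    return a, my - a * mx


def preprocess(raw, lo, hi):
    """Preprocessing: scale a raw input value to the range [0, 1]."""
    return (raw - lo) / (hi - lo)


def infer(model, raw, lo, hi, limit):
    """Inferencing: preprocess, predict, then derive a decision."""
    a, b = model
    prediction = a * preprocess(raw, lo, hi) + b
    decision = "alert" if prediction > limit else "ok"
    return prediction, decision


# Hypothetical labelled training data (raw input -> expected output).
raw_train = [0.0, 10.0, 20.0, 30.0, 40.0]
labels = [1.0, 3.0, 5.0, 7.0, 9.0]
lo, hi = min(raw_train), max(raw_train)

# Design phase: train the model once on the scaled training data.
model = fit_line([preprocess(r, lo, hi) for r in raw_train], labels)

# Use phase: run new, unseen data through the trained model.
prediction, decision = infer(model, 25.0, lo, hi, limit=8.0)
```

In a real measuring instrument the model would be far more complex, but the separation between a training step in the design phase and an inferencing step in the use phase remains the same.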

During the use phase in the life cycle, the AI model may undergo adaptation.

Adaptation aims to reduce prediction uncertainty by introducing a new learning process that utilizes updated training data, including additional information or data gathered during use.

Input to an AI system can also be information instead of data, for optimization tasks where the only input needed is the information on what is to be optimized. Some AI systems do not require any input but rather perform a given task on request (e.g. generating some synthetic image, music or text (Generative AI)).

3. AI Systems Definition according to the EU AI Act

The AI Act solely applies to those systems that fulfil the definition of an ‘AI system’ within the meaning of Article 3(1) AI Act. The definition of an AI system is therefore key to understanding the scope of application of the AI Act. The term "AI system" in the regulation closely aligns with the efforts of international organizations focusing on artificial intelligence [20] and [21]. This alignment aims to provide legal certainty, promote international consensus and widespread acceptance, while also allowing for flexibility to accommodate the fast-paced technological advancements in this field [22]. Moreover, the definition is based on key characteristics of artificial intelligence systems that distinguish them from simpler traditional software systems or programming approaches, and it does not cover systems that are based on rules defined solely by natural persons to automatically execute operations (see Recital 12 and [23]).

The EU AI Act defines an 'AI System' in Article 3(1) as follows:

‘AI system’ means a machine-based system that is designed to operate with varying levels of autonomy and that may exhibit adaptiveness after deployment, and that, for explicit or implicit objectives, infers, from the input it receives, how to generate outputs such as predictions, content, recommendations, or decisions that can influence physical or virtual environments.

That definition comprises seven main elements and two main phases: the pre-deployment or ‘building’ phase of the system and the post-deployment or ‘use’ phase of the system. The seven elements do not need to be present continuously across both phases. The definition recognizes that certain elements may exist in one phase, but may not carry over to the other [23].

The term “machine-based” refers to the obvious fact that AI systems run on machines. AI systems are designed to operate with varying levels of autonomy, meaning that they have some degree of independence of actions from human involvement and of capabilities to operate without human intervention (see Recital 12). The adaptiveness that an AI system may exhibit after deployment refers to self-learning capabilities, allowing the system to change while in use. AI systems can operate according to differently defined objectives. AI system objectives can belong to various categories that may overlap in some systems [22], e.g. explicit and human-defined, implicit in rules and policies, implicit in training data, or not fully known in advance.

Some systems may learn to help humans by learning more about their objectives through interactions. Some examples include recommender systems that use “reinforcement learning from human feedback” to gradually narrow down a model of individual users’ preferences.

A key characteristic of AI systems is their capability to infer. The capacity of an AI system to infer goes beyond basic data processing by enabling learning, reasoning or modelling [23]. Consequently, systems designed to enhance mathematical optimization, such as regression or classification methods, remain within the realm of ‘basic data processing’ and therefore fall outside the definition of an AI system [23].

For the purposes of the AI Act, environments should be understood as the contexts in which the AI systems operate, whereas outputs generated by the AI system, reflect different functions performed by AI systems and include predictions, content, recommendations or decisions. AI systems can be used on a stand-alone basis or as a component of a product, irrespective of whether the system is physically integrated into the product (embedded) or serves the functionality of the product without being integrated therein (non-embedded).

Given that the definition of the AI Act is very broad, it is not surprising that it faces controversy. The European Law Institute (ELI), for instance, responded to the European Commission that this definition lacks clarity in distinguishing AI from other IT systems [24]. Therefore, the ELI proposes a 'Three-Factor Approach' for identifying AI systems: 1) the amount of data or domain-specific knowledge used in development; 2) the system's ability to create new know-how during operation; and 3) the formal indeterminacy of outputs, where human discretion would normally apply. These factors operate in a flexible system where strength in one area can compensate for weakness in another. Generally, an IT system qualifies as AI when it scores at least three pluses across these factors, with pluses in at least two of the three factors. This interpretation acknowledges the AI Act's intentionally abstract definition while aiming to balance technical neutrality with practical applicability in categorising AI systems.
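The qualification rule of the Three-Factor Approach, as summarised above, can be written down as a short sketch. It assumes that each factor is rated with a non-negative number of pluses; the class and field names are hypothetical, and the rating scale per factor is not taken from [24].

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ThreeFactorScore:
    """Number of pluses per factor (assumed to be non-negative integers)."""

    data_or_knowledge: int      # factor 1: data or domain knowledge used
    new_know_how: int           # factor 2: new know-how created in operation
    output_indeterminacy: int   # factor 3: formal indeterminacy of outputs

    def qualifies_as_ai(self) -> bool:
        scores = (
            self.data_or_knowledge,
            self.new_know_how,
            self.output_indeterminacy,
        )
        if any(s < 0 for s in scores):
            raise ValueError("plus counts must be non-negative")
        total_pluses = sum(scores)
        factors_present = sum(1 for s in scores if s > 0)
        # At least three pluses overall, spread over at least two factors.
        return total_pluses >= 3 and factors_present >= 2
```

Under this reading, a system with three pluses in a single factor does not qualify, whereas a system with two pluses in one factor and one plus in another does, which reflects the idea that strength in one area can compensate for weakness in another.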

In February 2025, the Commission published guidelines on the AI system definition to facilitate the application of the first AI Act rules [23]. These guidelines explain the practical application of the legal concept, as anchored in the AI Act. The Commission aims to assist providers and other relevant persons in determining whether a software system constitutes an AI system and thereby to facilitate the effective application of the rules.

4. Classification of AI Systems

This section introduces the AI Act’s risk-based classification of AI systems. This helps in understanding the protected assets and goals, along with the AI practices, products, and applications associated with specific risk classes and their respective obligations. It serves as the foundation for categorizing MID measuring instruments as derived in detail in Section 5.

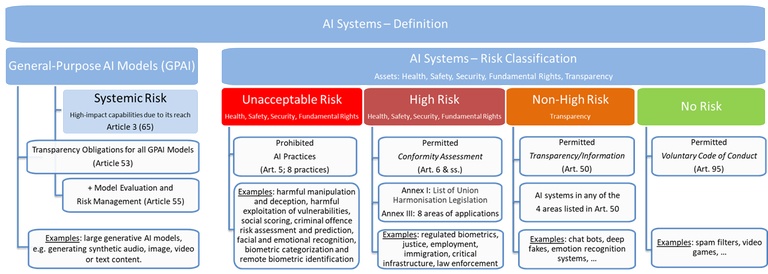

The AI Act establishes a tiered compliance framework with different categories of risk and corresponding requirements for each category. This classification includes four categories of risk ("unacceptable", "high", "non-high" and "no"), plus one additional category for general-purpose AI (see Figure 2). All AI systems will need to be inventoried and assessed to determine their risk category and the ensuing responsibilities.

Figure 2. Tiered compliance framework of the AI Act.

AI Systems posing what legislators consider an unacceptable risk to people’s safety, security and fundamental rights – such as biometric categorisation systems based on sensitive characteristics, social scoring, or AI used to manipulate human behaviour – are banned from use in the EU (Article 5 Prohibited Artificial Intelligence Practices).

High-risk AI Systems (Article 6(1) and 6(2)) are considered to pose a significant risk of harm to the health, safety, security or fundamental rights of natural persons, and must undergo conformity assessments. This applies especially if these systems are used in certain fields of application, e.g. in critical infrastructure, education, healthcare, law enforcement, border management or elections (see Annex III). These systems will carry the majority of compliance obligations (alongside general-purpose AI (GPAI) systems (Article 51) – see below). These obligations include the establishment of risk and quality management systems, data governance, human oversight, cybersecurity measures, post-market monitoring, and maintenance of the required technical documentation (Articles 8-15). Further obligations may be specified in subsequent AI regulations for healthcare, financial services, automotive, aviation, and other sectors, e.g., those listed in Annex I.

Non-high-risk AI applications are subject to transparency obligations (Article 6(3), Article 50, Recital 53), and those representing minimal or no risks are not regulated. However, for the latter, the AI Act invites companies to voluntarily commit to codes of conduct.

Although conformity assessment is required solely for high-risk AI systems, the new regulation includes novel provisions concerning GPAI models in Articles 51-56. Large generative AI models are a typical example of a general-purpose AI model (e.g. GPT-4, DALL-E, Google BERT, or Midjourney 5.1). While these technologies are expected to bring significant benefits soon and drive innovation across various sectors, their disruptive nature raises important policy questions regarding privacy, intellectual property rights, liability and accountability. Additionally, concerns have been raised about their potential to spread disinformation and misinformation.

These risks can significantly affect the market and may lead to negative consequences for public health, safety, security, fundamental rights, or society, with the potential to spread widely across the value chain. Hence, a further risk class labelled “systemic risk” is defined in Article 3(65), which refers to the risks linked to the high-impact capabilities of GPAI models (see Figure 2).

Providers of general-purpose AI models with systemic risk must fulfil further obligations – in addition to the obligations listed in Articles 53 and 54 – outlined in Article 55, such as model evaluation and risk management.

These new rules introduce horizontal obligations for all GPAI models and therefore provide for a new, more centralised system of oversight and enforcement involving new institutions and coordination at European and national levels. The collaboration of the Commission with legal metrology institutions, e.g. WELMEC, is directly addressed in Recital 74 and Article 15(2), e.g. via the newly established EU AI Office. An in-depth analysis and a graphical representation of the interrelations between the players in this new system can be found in [25].

In the following, we will focus on high-risk AI systems. The classification rules for high-risk AI systems are provided by Articles 6(1) and 6(2) of the AI Act:

Article 6(1)

Irrespective of whether an AI system is placed on the market or put into service independently of the products referred to in points (a) and (b), that AI system shall be considered high-risk where both of the following conditions are fulfilled:

(a) the AI system is intended to be used as a safety component of a product, or the AI system is itself a product, covered by the Union harmonisation legislation listed in Annex I;

(b) the product whose safety component pursuant to point (a) is the AI system, or the AI system itself as a product, is required to undergo a third-party conformity assessment, with a view to the placing on the market or putting into service of that product pursuant to the Union harmonisation legislation listed in Annex I.

AI systems fall within the high-risk category if these products undergo a conformity assessment procedure according to relevant Union legislation listed in Annex I. The first part of Annex I provides legislation based on the New Legislative Framework. Such regulated products are machinery, toys, lifts, equipment and protective systems intended for use in potentially explosive atmospheres, radio equipment, pressure equipment, recreational craft equipment, cableway installations, appliances burning gaseous fuels, medical devices, and in vitro diagnostic medical devices.

The term ‘safety component’ is defined in Article 3(14) (and Recital 55) of the AI Act and means a component of a product or of a system which fulfils a safety function for that product or system, but which is not necessary for the system to function. Failure or malfunctioning of such components might directly lead to risks to the physical integrity of, e.g. critical infrastructure and thus to risks to the health and safety of persons and property.

Article 6(2)

In addition to the high-risk AI systems referred to in paragraph 1, AI systems referred to in Annex III shall also be considered high-risk.

High-risk AI systems according to Article 6(2) are the AI systems described in the eight areas listed in Annex III. These areas are: permitted biometrics (e.g. remote biometric identification systems; biometric categorization; emotion recognition); critical infrastructure (safety components in the management and operation of such infrastructure); education and vocational training; employment, workers management and access to self-employment; access to and enjoyment of essential private services and essential public services and benefits; law enforcement, insofar as their use is permitted under relevant Union or national law; migration, asylum and border control management, insofar as their use is permitted under relevant Union or national law; and finally administration of justice and democratic processes.

The Commission is empowered to amend Annex III by adding or modifying use cases of high-risk AI systems (Article 7).
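The two classification rules quoted above can be condensed into a simplified decision sketch. It assumes that the relevant facts about a system are already known as plain flags; the names are hypothetical, the area labels are shortened from the Annex III list given above, and the sketch does not model any exemptions or later amendments (e.g. under Article 6(3) or Article 7).

```python
from dataclasses import dataclass
from typing import Optional

# Shortened labels for the eight Annex III areas listed in the text.
ANNEX_III_AREAS = frozenset({
    "biometrics",
    "critical infrastructure",
    "education and vocational training",
    "employment and workers management",
    "essential private and public services",
    "law enforcement",
    "migration, asylum and border control",
    "administration of justice and democratic processes",
})


@dataclass(frozen=True)
class AISystem:
    # Article 6(1)(a): safety component of, or itself, an Annex I product.
    annex_i_product_or_safety_component: bool
    # Article 6(1)(b): third-party conformity assessment is required.
    third_party_assessment_required: bool
    # Article 6(2): intended use falls into one of the Annex III areas.
    annex_iii_area: Optional[str] = None


def is_high_risk(system: AISystem) -> bool:
    """Simplified reading of Article 6(1) and 6(2); exemptions not modelled."""
    by_article_6_1 = (
        system.annex_i_product_or_safety_component
        and system.third_party_assessment_required
    )
    by_article_6_2 = system.annex_iii_area in ANNEX_III_AREAS
    return by_article_6_1 or by_article_6_2
```

Note that both conditions of Article 6(1) must hold together, whereas a use case in one of the Annex III areas is sufficient on its own under Article 6(2).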

5. Impact on Legal Metrology

Against the background of the previous sections, this section explores the implications of the EU AI Act for legal metrology with regard to the AI system definition and risk classification. Specifically, we examine how legal metrology can effectively tackle specific issues posed by the integration of AI models within the existing legal framework of MID.

5.1. Impact of the Definition

The AI Act defines an "AI system" using specific terms to capture, as comprehensively as possible, the structure and functions of the systems intended to be regulated. These terms are in turn defined in the AI Act, and more details are explained in [23] and [24]. It needs to be clarified whether the definition of the AI Act can realistically be harmonised with the functionalities of existing MID systems. Even if the answer is ‘yes’ for a subset of terms, it must be verified whether adequate requirements already exist to evaluate such systems. In a further step, it could be speculated whether metrological systems that utilise AI models with such functions are conceivable.

While the definition of AI systems includes several concepts, the idea of learning in use – specifically, ‘adaptiveness after deployment’ – is the most unfamiliar for legal metrology. Therefore, we will focus on that concept.

Adaptiveness allows software or its crucial parameters, like the model's weights, to evolve and change over time. These software changes or updates could modify the system substantially, in the worst case unpredictably.

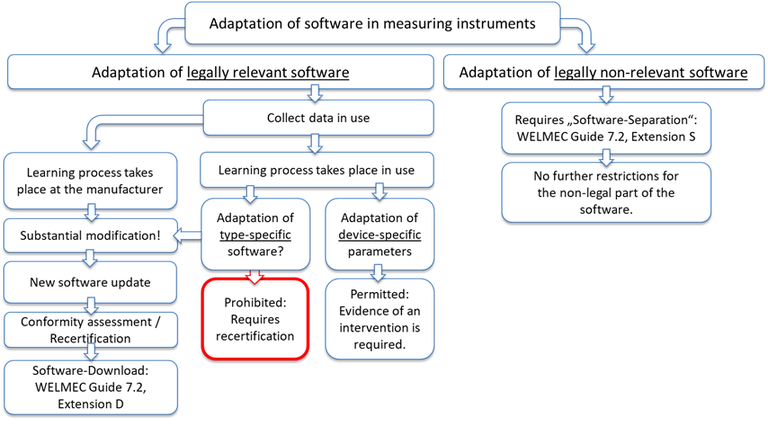

As the AI Act aligns with the New Legislative Framework, one can refer to the Blue Guide [9] for guidance on how to handle such a situation. The Blue Guide outlines the circumstances under which a software update is considered a substantial modification, thereby necessitating recertification. The first situation occurs when the update alters the original intended functions, type, or performance of the product in a way that was not anticipated during the initial risk assessment. The second situation occurs when the nature of the hazard has changed, or the level of risk has increased as a result of the software update. So, according to the first situation, changes made to the algorithm or performance of AI systems that continue to 'learn' after being placed on the market or put into service are not considered substantial modifications, as long as these changes have been predetermined by the provider and evaluated during the conformity assessment process (AI Act, Recital 128).

The same applies to legal metrology as long as learning is considered an adaptation of the AI model’s parameters/weights. For practical reasons, the international guidelines for software in measuring instruments provide acceptable solutions to accommodate this concept. To this end, OIML D 31 [18] distinguishes between type-specific and device-specific legally relevant parameters (OIML D 31, 3.1.26). Device-specific parameters are legally relevant parameters with a value that depends on the individual instrument, e.g. adjustment parameters – such as span adjustment – or corrections and configuration parameters (e.g. maximum value, minimum value, units of measurement, etc.). Type-specific parameters are legally relevant parameters, too, and have identical values for all specimens of a type. They are fixed during the conformity assessment and can only be changed in the course of a certified software download/update for all instruments in the market.

It is common for device-specific parameters to change over time, often due to daily recalibrations. These changes can happen without requiring re-certification or re-verification of the measuring instrument, provided that the adjustments do not constitute substantial modifications, that is, they do not alter the system's functionality (see Figure 3, middle). As long as any changes are properly documented and provide evidence of the intervention, they remain compliant with the requirements outlined in MID, Annex I, 8.3.

To better address the specifics of "AI learning" using device-specific parameters, the 2023 revision of OIML D 31 [18] tackles the AI challenge by specifying a "dynamic module of legally relevant software":

This dynamic module is a software module whose functional behaviour depends on predefined device-specific parameters that may change over time during use. Such dynamic modules may incorporate or utilise machine learning or artificial intelligence characteristics and processes.

To maintain the accountability of AI decisions, it is important to document training data, algorithm design, and possibly decision data for future validation. In OIML D 31 (2023), this is accomplished by specifying a "snapshot." This snapshot is a static representation of a dynamic module of legally relevant software at a specific point in time that can include the algorithm design (e.g. topology and weights of a neural network); the trail of the evolution of dynamic parameters of a module; and the evolved parameters of the dynamic parts of the module.
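The content of such a snapshot can be pictured as a small record. The following Python sketch is only an illustration: OIML D 31 does not prescribe a data format, so the class `Snapshot`, its field names, and the use of a SHA-256 digest as tamper evidence are assumptions made here.

```python
import hashlib
import json
from dataclasses import asdict, dataclass, field
from typing import Dict, List


@dataclass
class Snapshot:
    """Hypothetical static record of a dynamic module at one point in time."""
    taken_at: str                  # timestamp supplied by the caller
    topology: List[int]            # algorithm design, e.g. neurons per layer
    weights: List[float]           # evolved parameters of the dynamic part
    parameter_trail: List[Dict[str, float]] = field(default_factory=list)

    def digest(self) -> str:
        # Serialize with sorted keys so identical content always hashes identically.
        canonical = json.dumps(asdict(self), sort_keys=True, separators=(",", ":"))
        return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


before = Snapshot("2025-07-01T00:00:00Z", [4, 8, 1], [0.12, -0.40, 0.93])
after = Snapshot(
    "2025-07-02T00:00:00Z",
    [4, 8, 1],
    [0.12, -0.38, 0.93],
    parameter_trail=[{"index": 1, "old": -0.40, "new": -0.38}],
)
# Any change to the weights or to the trail yields a different digest.
changed = before.digest() != after.digest()
```

Storing the digest alongside the snapshot would allow a later comparison to reveal whether the recorded state has been altered, which is one conceivable way to support future validation.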

When the learning process involves adapting type-specific parameters or changing the model's structure – such as adding a new layer to a neural network – it may be considered a substantial modification and an update to the legally relevant software, so recertification will be required (see Figure 3, left side). Consequently, a learning process during use that changes type-specific parameters in an individual instrument is not covered by MID (see Figure 3, middle). It requires the software-update process outlined in Figure 3 on the left side.

Although the software-update process itself is currently not regulated under MID and is therefore handled individually by the member states, a harmonized software-update procedure agreed by the member states is available from WELMEC (WELMEC Guide 7.2, Extension D [19]).

If the AI system is not part of the legally relevant software (see Figure 3, right side), e.g. a GPAI module, MID Annex I 7.6 applies:

[…] When a measuring instrument has associated software which provides other functions besides the measuring function, the software that is critical for the metrological characteristics shall be identifiable and shall not be inadmissibly influenced by the associated software.

To ensure that legally relevant software remains unaffected by associated software, such as an AI module, WELMEC Guide 7.2 provides acceptable solutions for implementing software separation (as outlined in its Extension S). That encompasses defining communication requirements between these software components. This method enables the AI software to adapt as necessary while keeping a clear distinction from the metrological software, ensuring that it does not interfere with metrological functions (see Figure 3, right branch).
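The idea of software separation can be illustrated with a toy example. The Python sketch below is not taken from WELMEC Guide 7.2; the class names, the gain parameter, and the unit are hypothetical, and Python itself does not enforce the protection that a real instrument would need. It only shows the direction of the data flow: the associated AI module receives immutable results and has no write path to the legally relevant part.

```python
from dataclasses import FrozenInstanceError, dataclass
from typing import List


@dataclass(frozen=True)
class MeasurementResult:
    """Immutable value handed across the interface."""
    value: float
    unit: str


class LegallyRelevantCore:
    """Stands in for the metrologically critical software."""

    def __init__(self, gain: float) -> None:
        self._gain = gain  # legally relevant parameter, no setter is offered

    def measure(self, raw: float) -> MeasurementResult:
        return MeasurementResult(value=raw * self._gain, unit="kWh")


class AssociatedAIModule:
    """Stands in for a non-metrological module that may adapt freely."""

    def __init__(self) -> None:
        self.history: List[float] = []

    def observe(self, result: MeasurementResult) -> None:
        self.history.append(result.value)

    def forecast(self) -> float:
        # Placeholder "model": the mean of all observed values.
        return sum(self.history) / len(self.history)


core = LegallyRelevantCore(gain=2.0)
ai = AssociatedAIModule()
result = core.measure(1.5)
ai.observe(result)

try:
    result.value = 0.0  # attempted write from the associated side
    write_blocked = False
except FrozenInstanceError:
    write_blocked = True
```

In this sketch the AI module can be replaced or retrained without touching `LegallyRelevantCore`, which mirrors the intent of keeping the two software parts distinct.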

Due to the close cooperation of OIML and WELMEC regarding software requirements for measuring instruments, the new requirements laid down in OIML D 31 will likely be transferred into the WELMEC software guides [19, 26, 27, 28, 29] shortly.

Figure 3 illustrates the currently applicable ways of adapting AI modules, specifically by learning, in measuring instruments.

Figure 3. Different ways of AI module adaptation in measuring instruments.
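The three branches of Figure 3 can be summarized as a short decision routine. The Python sketch below is a simplified illustration of the text above; the names `Route`, `Adaptation` and `route_for` are hypothetical, and in practice the assessment of each input is a matter for the conformity assessment body.

```python
from dataclasses import dataclass
from enum import Enum, auto


class Route(Enum):
    SOFTWARE_UPDATE_WITH_RECERTIFICATION = auto()  # Figure 3, left
    DOCUMENTED_PARAMETER_CHANGE = auto()           # Figure 3, middle
    SEPARATED_SOFTWARE = auto()                    # Figure 3, right


@dataclass(frozen=True)
class Adaptation:
    legally_relevant: bool       # is the AI module part of the legally relevant software?
    changes_type_specific: bool  # touches parameters fixed during conformity assessment?
    changes_structure: bool      # e.g. adds a layer to a neural network


def route_for(adaptation: Adaptation) -> Route:
    # AI modules outside the legally relevant software follow the separation branch.
    if not adaptation.legally_relevant:
        return Route.SEPARATED_SOFTWARE
    # Type-specific or structural changes are treated as a software update.
    if adaptation.changes_type_specific or adaptation.changes_structure:
        return Route.SOFTWARE_UPDATE_WITH_RECERTIFICATION
    # Remaining case: only device-specific parameters evolve, with documentation.
    return Route.DOCUMENTED_PARAMETER_CHANGE


weights_only = route_for(Adaptation(True, False, False))
new_layer = route_for(Adaptation(True, False, True))
separate_module = route_for(Adaptation(False, False, False))
```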

5.2. Risk Classification of Measuring Instruments in the Context of the AI Act

Article 6 of the EU AI Act outlines the classification rules for high-risk AI systems (see also Figure 2). Instead of directly describing AI systems, it references products that fall under the Union harmonization legislation listed in Annex I and the application areas defined in Annex III. The AI Act includes Union legislation based on the New Legislative Framework in Section A of its Annex I. Notably, the Measuring Instruments Directive (2014/32/EU; MID [31]) and the Directive on Non-Automatic Weighing Instruments (2014/31/EU; NAWID [30]) are not included in this list.

It needs to be determined whether there are overlaps between the eight application areas listed in Annex III of the AI Act and those of measuring instruments covered by the MID. There are only two application areas where measuring instruments regulated under MID are used: critical infrastructures, e.g. for the supply of electricity, gas, heat and water, and law enforcement. In the following, we check whether measuring instruments fall under these two application areas of Annex III.

‘Critical infrastructure’ means an asset, a facility, equipment, a network or system, or a part thereof, which is necessary for the provision of an essential service within the meaning of Article 2(4) of the Critical Entities Resilience Directive (CER) (2022/2557/EU). More concretely, Annex III No. 2 of the AI Act defines “critical infrastructure” as an application area of AI systems intended to be used as safety components in the management and operation of critical digital infrastructure, road traffic and the supply of water, gas, heating and electricity. The term ‘safety component of a product or system’ was introduced in Chapter 5.

Measuring instruments categorized under the MID could appear in critical infrastructures. Such measuring instruments are, e.g. smart meters, intelligent metering systems, and the German smart-meter gateway [17]. However, these are not devices designed to fulfil safety functions for products or systems. Even if a measuring instrument were to encompass a "safety component of a product or system," as defined in Article 3 (14) of the AI Act, this function would not be considered relevant from a metrological perspective.

‘Law enforcement’ is the other potential application area of measuring instruments in Annex III. Law enforcement authority and Law enforcement are defined in Article 3 (40, 41) AI Act: The "law enforcement authority" refers to any public authority responsible for preventing, investigating, detecting, or prosecuting criminal offences, as well as safeguarding against and preventing threats to public security. "Law enforcement" refers to activities carried out by these authorities for the same purposes.

Please note that the AI Act focuses on criminal offences, i.e. major violations of the law, and not on the administrative offences that legal metrology focuses on, which do not reach the punishable unlawful content of a criminal offence. Whether a measurement indicates a criminal or an administrative offence depends on a threshold defined by national law. The classical measuring instruments used by the police in law enforcement are nationally regulated traffic speed measuring instruments and alcohol breath analyzers. Annex III No. 6, letters (a) to (e), defines the AI systems considered high-risk in the area of “Law enforcement”. These are AI systems intended to be used to assess the risk of a natural person becoming a victim of criminal offences; to be used as polygraphs (lie detectors) and similar tools; to be used to evaluate the reliability of evidence in the course of investigation or prosecution of criminal offences; to be used for assessing the risk of a natural person of offending or re-offending not solely based on profiling of natural persons as referred to in Article 3(4) of Directive (EU) 2016/680, or to assess personality traits and characteristics or past criminal behaviour of natural persons or groups; and to be used for profiling of natural persons as referred to in Article 3(4) of Directive (EU) 2016/680 in the course of detection, investigation or prosecution of criminal offences.

These five application areas do not include any legally relevant metrological functions, nor could metrological functions usually assist these applications. Consequently, none of the measuring instruments covered by MID or NAWID fall into these categories, unless the Commission amends Annex III by adding or modifying MID-relevant use cases of high-risk AI systems according to Article 7.

The remaining issue in the risk classification of MID instruments is whether the obligations for the non-high-risk category extend to measuring instruments. Transparency obligations for non-high-risk AI systems, as laid down in Article 50, exclusively apply to five classes of AI systems: first, to AI systems intended to interact directly with natural persons, unless this is obvious from the point of view of a natural person; second, to AI systems, including general-purpose AI systems, generating synthetic audio, image, video or text content; third, to AI-based emotion recognition systems or biometric categorisation systems; fourth, to AI systems that generate or manipulate image, audio or video content constituting a deep fake; and finally, to AI systems that generate or manipulate text which is published with the purpose of informing the public on matters of public interest.

Currently, it is difficult to envision a metrologically relevant AI system that fits into one of these five classes and would therefore be subject to transparency obligations.

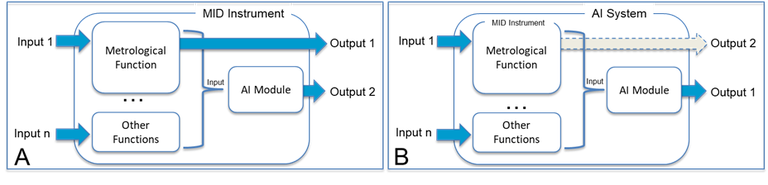

Nonetheless, MID instruments may fall into the high-risk or non-high-risk class under special circumstances, e.g. if they encompass an AI Act-relevant module. Such an AI module might, for example, use the metrological function's result and serve a purpose listed in Annex III or Article 50. Such a system is outlined in part A of Figure 4. Member states are already confronted with such applications, e.g. to support law enforcement in assessing the risk of a natural person becoming a victim of criminal offences according to Annex III.

Figure 4. A: MID instrument encompassing an AI module which uses the metrological Output 1 and the outputs of non-metrological functions to produce a non-metrological Output 2. B: The MID instrument is part of an AI system. Output 1: primary output. Output 2: secondary output.

Another possibility is that the MID instrument is part of a product that includes a component functioning like an AI system, such as for safety purposes, or that the AI system itself is a product covered by the AI Act (see part B of Figure 4). So far, this case has neither been discussed between industry and the Notified Bodies nor been detected on the market by the Market Surveillance Authorities.

For both cases outlined in Figure 4, the obligations for conformity assessment and market surveillance apply separately under each of the two acts.

As the AI Act includes elements and procedures from the New Legislative Framework, it is not surprising that the requirements for conformity assessment, while showing some AI-specific variations, significantly overlap with those of the MID. The essential requirements for high-risk AI systems (EU AI Act Chapter III, Section 2 (Articles 8-15)) include a risk management system, quality management systems, technical documentation, compliance with standards (e.g. for accuracy, robustness, and cybersecurity), record-keeping, transparency, and provision of information to deployers/users. A good example of this overlap is risk assessment. Risk assessment of software in MID is well covered in WELMEC Guide 7.6 “Risk Assessment” [26]. This WELMEC guide has been developed in close cooperation with its users [32, 33, 34, 35, 36].

Besides these cross-sectional requirements, the AI Act sets novel requirements beyond those already known in legal metrology. These include obligations for effective data governance, for enabling and conducting human oversight, and for registering high-risk AI systems in the EU database, as well as the transparency obligations required for non-high-risk systems.

However, in the scenarios sketched in Figure 4, the administrative burden could be significantly reduced if the obligations required by the different acts could be clearly delineated. In that way, certification and market surveillance activities can be carried out separately. Legal metrology has already foreseen that possibility when a measuring instrument has associated software which provides other functions besides the measuring function, such as an AI module. In that case, the software critical for the metrological characteristics shall be identifiable and not be inadmissibly influenced by the associated software (MID Annex I 7.6). To effectively maintain the integrity of legally relevant software in relation to associated software, the WELMEC Guide 7.2 offers valuable solutions for achieving software separation, as detailed in Extension S. It also provides clear guidelines for establishing communication requirements between these software components, promoting a more reliable and compliant system.

5.3. Measurement Accuracy, Error Margins and Machine Learning

The reliability of measurements is one central aim of legal metrology. Reliable means that similar measurements in a comparable environment lead to a comparable measurement result within the legally allowed error margin. That the measuring instruments do not exceed these error margins must be proven during conformity assessment or during the regular verification of the individual instrument on the market.
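The check against the legally allowed error margin amounts to comparing each error of indication with a limit. The following Python sketch illustrates this under simplifying assumptions: a single symmetric limit `mpe` (a hypothetical parameter standing for the maximum permissible error), made-up numerical values, and no treatment of measurement uncertainty.

```python
from typing import List, Sequence


def errors_of_indication(indicated: Sequence[float], reference: Sequence[float]) -> List[float]:
    """Error of each indication relative to its reference value."""
    if len(indicated) != len(reference):
        raise ValueError("each indication needs exactly one reference value")
    return [i - r for i, r in zip(indicated, reference)]


def within_mpe(indicated: Sequence[float], reference: Sequence[float], mpe: float) -> bool:
    """True if every error of indication stays inside the symmetric limit."""
    return all(abs(e) <= mpe for e in errors_of_indication(indicated, reference))


# Illustrative values only: three indications against a 10.0 reference, limit 0.05.
compliant = within_mpe([10.02, 9.97, 10.01], [10.0, 10.0, 10.0], mpe=0.05)
non_compliant = within_mpe([10.08], [10.0], mpe=0.05)
```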

Therefore, it makes sense to propose machine learning for the reduction of the measurement uncertainty, i.e. to increase measuring accuracy [37]. However, in the context of legal metrology, the following should be considered. During the design phase of the system, it is essential to ensure that the measurement error falls within the legally defined margins. If improvements are envisioned to reduce the error margin below these legal limits – such as by utilizing machine learning with field data – there must be a clear benefit that justifies the investment in such efforts.

One potential benefit could be the expansion of standardized instruments' applications. Machine learning techniques could enhance these instruments' characteristics, making them suitable for high-accuracy applications. This is particularly important in industries such as pharmaceuticals, where narrow error margins are essential and expensive instruments are commonly used.

Currently, the measurement accuracy of conventional physical sensors is much better than the permissible error margin. Therefore, sensor accuracy is only one component of the integral system error and leaves enough room for optimisation. The use of machine learning could therefore expand the application possibilities for low-cost sensors and thus reduce costs in various fields.

Please note that the systematic exploitation of the error limits under the Measuring Instruments Directive (MID) using machine learning algorithms, i.e. the deliberate use of the permissible tolerances of measuring instruments to achieve certain advantages, is prohibited. Such exploitation could, for example, lead to measuring instruments being calibrated in such a way that they always measure at the upper or lower end of the permissible error limit, which can result in a systematic favouring of one of the parties involved. Consequently, such methods are not allowed and would be detected during conformity assessment.
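How such a one-sided use of the tolerance might show up in test data can be sketched with a simple statistical screen. The Python example below is an assumption-laden illustration and not a prescribed test procedure: it combines an exact sign test with a threshold on the mean error, and the function names, the significance level `alpha` and the factor `usage` are hypothetical choices.

```python
from math import comb
from statistics import mean
from typing import Sequence


def sign_test_p_value(errors: Sequence[float]) -> float:
    """Two-sided exact sign test; H0: positive and negative errors are equally likely."""
    nonzero = [e for e in errors if e != 0]
    n = len(nonzero)
    if n == 0:
        return 1.0
    positives = sum(1 for e in nonzero if e > 0)
    tail = min(positives, n - positives)
    p = 2 * sum(comb(n, i) for i in range(tail + 1)) / 2 ** n
    return min(1.0, p)


def looks_exploited(errors: Sequence[float], mpe: float,
                    alpha: float = 0.05, usage: float = 0.8) -> bool:
    """Flag error sets that respect the limit but sit one-sidedly close to it."""
    within_limit = all(abs(e) <= mpe for e in errors)
    one_sided = sign_test_p_value(errors) < alpha
    near_limit = abs(mean(errors)) >= usage * mpe
    return within_limit and one_sided and near_limit


# Illustrative data: ten errors all at 90 % of the limit versus a balanced set.
biased = looks_exploited([0.9] * 10, mpe=1.0)
balanced = looks_exploited(
    [0.3, -0.2, 0.1, -0.4, 0.2, -0.1, 0.05, -0.3, 0.25, -0.15], mpe=1.0
)
```

A flag raised by such a screen would only be a hint that warrants closer examination; it does not by itself establish deliberate exploitation.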

6. Conclusion

The European AI Act defines what an AI system is, even though its scope is quite extensive. On that basis, the AI Act intends not to hamper innovation by solely regulating complex AI systems which are distinguished from simpler traditional software systems or programming approaches. These AI modules generate predictions that are used to create or enhance recommendations, decisions, and actions based on a set of human-defined objectives.

Such complex AI modules may be integrated into MID-regulated measuring instruments, thereby subjecting them to the AI Act's definitions and possibly also to its obligations. Regarding the AI Act’s risk classification, such an AI product falls under the high-risk class if it is either covered by the relevant Union legislation listed in Annex I – because conformity assessment is already applied in that legal framework – or by one of the eight application areas described in Annex III (Article 6). If neither applies, it must be checked whether the AI module falls into any of the five classes listed in Article 50. If so, the system must fulfil at least transparency obligations. Finally, all AI systems which are non-high-risk or do not have to fulfil transparency obligations are invited to voluntarily commit to codes of conduct.
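The sequence of checks described above can be condensed into a short decision routine. The Python sketch below is a deliberately simplified reading of this paragraph, not of the AI Act itself: the three boolean inputs and the names `Obligation` and `classify` are assumptions made for illustration, and the legal assessment behind each input (for instance the details of Article 6) is left out.

```python
from enum import Enum


class Obligation(Enum):
    HIGH_RISK = "high-risk obligations"
    TRANSPARENCY = "transparency obligations (Article 50)"
    VOLUNTARY = "voluntary codes of conduct"


def classify(covered_by_annex_i: bool,
             in_annex_iii_area: bool,
             in_article_50_class: bool) -> Obligation:
    # Step 1: Annex I legislation or an Annex III application area leads to high-risk.
    if covered_by_annex_i or in_annex_iii_area:
        return Obligation.HIGH_RISK
    # Step 2: otherwise check the Article 50 classes.
    if in_article_50_class:
        return Obligation.TRANSPARENCY
    # Step 3: nothing applies, only voluntary commitments remain.
    return Obligation.VOLUNTARY


# A metrological AI module as discussed in Section 5.2: none of the inputs applies.
plain_mid_instrument = classify(False, False, False)
# An added AI module that serves an Annex III purpose.
with_annex_iii_module = classify(False, True, False)
```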

If such an AI module is a GPAI module, e.g. DeepSeek, it may constitute systemic risks at the Union level and must address additional obligations according to Article 53 and Article 55.

This clearly illustrates that a system may meet the definition of an AI system without necessarily being obliged to fulfil any requirements.

MID measuring instruments only fall under the AI system definition of the European AI Act if they encompass an AI Act-relevant module. On the one hand, this condition is fulfilled by a metrological function that benefits from such an AI Act-relevant module. However, unless the Commission amends Annex III by adding or modifying MID-relevant use cases of high-risk AI systems (Article 7), MID instruments end up fulfilling voluntary codes of conduct. Currently, the MID is under evaluation by the Commission. It remains open whether and how the AI Act will affect this process.

In addition, the conditions of the AI Act definition are fulfilled when a MID measuring instrument includes an AI module that generates non-metrological outputs, which may or may not utilize the results of the metrological functions. This may sound academic, but it presents a realistic case where high-risk obligations for the entire system might apply. Many member states are already confronted with such applications.

In that case, the MID instrument must fulfil both the high-risk obligations of the AI Act and the MID obligations. These obligations encompass conformity assessment and market surveillance, potentially involving two different Notified Bodies and different market surveillance institutions. Two distinct conformity assessment certificates will be issued. In the worst-case scenario, the coordination of the work is inadequate, resulting in redundant examinations.

Therefore, proper software separation between the metrological software and the non-metrological AI modules is highly recommended. In doing so, the non-metrological AI module is freed from MID obligations and vice versa. Software updates of the separated AI modules are then possible without re-verification, and even adaptation of the AI module's parameters after deployment is easily accommodated.

We learned above that even if a metrological system may qualify as an AI system, the products regulated under the MID and NAWID do not necessarily have to fulfil obligations according to the AI Act.

Attributes qualifying the metrological system as an AI system under the AI Act may introduce functions and procedures into the MID quality infrastructure that are unconventional but must be manageable. Prominent examples are the functions described, e.g. by the terms “autonomy”, “adaptiveness” or “inferencing”.

Terms like “autonomy” and “inferencing” are challenging to apply in legal metrology. On the other hand, terms like “adaptiveness” can be effectively applied in metrology, and their implications are already being addressed through appropriate OIML requirements in OIML D 31. However, it has to be noted that such self-adapting metrological systems currently do not exist. Furthermore, the AI modules currently used have been trained during the design phase and do not adapt during use. These systems are typically modified using established software download procedures and require recertification.

The Commission has clarified in its guidelines on the definition of an artificial intelligence system [23] that systems which have applied machine learning-based models but have been used in a consolidated manner for a long time do not fall under the AI Act’s definition. Furthermore, the systems already placed on the market or put into service before 2 August 2026 benefit from the ‘grandfathering’ clause foreseen in Article 111(2) AI Act.

Nonetheless, the use of AI modules as part of measuring instruments will increase. Furthermore, the potential metrological functions based on artificial intelligence and self-learning capabilities throughout the life cycle of measuring instruments remain open and hold great promise. They range from improving measurement accuracy and applications in the design and maintenance phase to supporting market surveillance and verification authorities and manufacturers by suggesting recertifications or maintenance intervals. However, enhancing measurement accuracy is constrained by legally mandated error margins, which set the boundaries for acceptable precision. Their further improvement is therefore aimed at special business models.

The integration of these AI modules in legal metrology modifies the software architecture of traditional measuring instruments significantly (neural nets vs. classical code; CPUs vs. GPUs). As a result, the skills, procedures, and tools required for software testing need to be further developed both scientifically and technically, e.g. [11, 15, 18]. This is crucial for ensuring high-quality software testing as part of the conformity assessment process for measuring devices in parallel with the ongoing technological development of software systems.

The advice and guidelines stemming from the new governance structure, i.e. the AI Office and the AI Board, as well as the harmonized standards developed by CEN and CENELEC, are shaping the state of the art in regulating innovative software systems. This could also facilitate the updating of the existing standards and guidelines in legal metrology. A prominent example of the successful transfer of such measures is the set of new obligations laid down in OIML D 31, which will also be transferred to the European WELMEC Guide 7.2 “Software”.

To ensure measurement accuracy and fairness in commercial transactions, the EU Artificial Intelligence Act references legal metrology in Recital 74 and metrology in Article 15 (2), with the aim of encouraging the Commission’s collaboration with international partners working on metrology and relevant measurement indicators relating to AI. As a result, the Commission, WELMEC, and OIML may take initial steps to further support this development in close cooperation with organizations such as the EU AI Office.

The European AI Act is a starting point for a new model of governance centred around European values and technology.

7. Acknowledgements

The author wishes to express his gratitude to Dr. Marko Esche, head of PTB's working group "Metrological Software," and Martin Nischwitz, Co-Convenor of WELMEC Working Group 7 "Software" and convenor of Working Group 7’s "New Technologies" subgroup, for the fruitful discussions and helpful remarks.

Declaration of interests

The author declares that he has no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

References

[1] F. Thiel, Digital transformation of legal metrology – The European Metrology Cloud, OIML Bulletin LIX(1), 10-21

[2] F. Thiel, and J. Wetzlich: “The European Metrology Cloud: Impact of European Regulations on Data Protection and the Free Flow of Non-Personal Data”, 19th International Congress of Metrology, 01001 (2019). doi: 10.1051/metrology/201901001

[3] Dohlus, M. Nischwitz, A. Yurchenko, R. Meyer, J. Wetzlich and F. Thiel, 2020, Designing the European Metrology Cloud, OIML Bulletin LXI(1), 8-17.

[4] Artificial Intelligence in Society, OECD Publishing, Paris. doi: 10.1787/eedfee77-en.

[5] Regulation (EU) 2024/1689 of the European Parliament and of the Council of 13 June 2024 laying down harmonised rules on artificial intelligence and amending Regulations (EC) No 300/2008, (EU) No 167/2013, (EU) No 168/2013, (EU) 2018/858, (EU) 2018/1139 and (EU) 2019/2144 and Directives 2014/90/EU, (EU) 2016/797 and (EU) 2020/1828 (Artificial Intelligence Act)

[6] Future of Life Institute; The AI Act Explorer; https://artificialintelligenceact.eu/ai-act-explorer/; 2024

[7] SB-1047 Safe and Secure Innovation for Frontier Artificial Intelligence Models Act, California State Legislature, USA, 08/29/2024

[8] EU Artificial Intelligence Act (Regulation (EU) 2024/1689)

[9] Commission Notice: The ‘Blue Guide’ on the implementation of EU product rules 2022 (2022/C 247/01), https://eur-lex.europa.eu/legal-content/EN/TXT/?uri=OJ:C:2022:247:TOC

[10] European Commission, Interim Evaluation of the Measuring Instruments Directive – Final report – July 2010, http://ec.europa.eu/DocsRoom/documents/6584/attachments/1/translations

[11] Esche, M., Meyer, R. and Nischwitz, M., 2021, Conformity assessment of measuring instruments with artificial intelligence, Datenschutz Datensich 45, 184-189. doi: 10.1007/s11623-021-1415-4

[12] Kuzin, A.Y., Kroshkin, A.N., Isaev, L.K. et al., 2023, Practical aspects of applying artificial intelligence in metrology, Meas. Tech. 66, 717-727. doi: 10.1007/s11018-024-02285-2

[13] Tameem Adel, Sam Bilson, Mark Levene and Andrew Thompson, 2024, Trustworthy Artificial Intelligence in the Context of Metrology, in Producing Artificial Intelligent Systems: The roles of Benchmarking, Standardisation and Certification, Studies in Computational Intelligence, edited by M. I. A. Ferreira, Springer. doi: 10.48550/arXiv.2406.10117

[14] Ana Gleice da Silva Santos, Luiz Fernando Rust Carmo and Charles Bezerra do Prado, 2024, Machine learning in legal metrology – detecting breathalyzers' failures, Meas. Sci. Technol. 35, 045015. doi: 10.1088/1361-6501/ad1d2c

[15] J. Litzinger, J. Neumann, D. Peters and F. Thiel, 2024, Streamlining Conformity Assessment of Software applying Large Language Models, in Measurement: Sensors 2024, doi: 10.1016/j.measen.2024.101792

[16] A. Oppermann, F. Grasso Toro, F. Thiel and J.-P. Seifert, 2018, Secure cloud computing: Continuous anomaly detection approach in legal metrology, IEEE International Instrumentation and Measurement Technology Conference (I2MTC), Houston, TX, USA, pp. 1-6, doi: 10.1109/I2MTC.2018.8409767

[17] BSI, Technische Richtlinie BSI TR-03109-1, Anlage VI: Betriebsprozesse mit Beteiligung des SMGW, version 2.0, 13.12.2024

[18] OIML D 31:2023, General requirements for software-controlled measuring instruments

[19] WELMEC Guide 7.2 “Software”, https://www.welmec.org/guides-and-publications/guides/

[20] ISO/IEC 22989:2022(en), Information technology – Artificial intelligence – Artificial intelligence concepts and terminology

[21] OECD (2022), "OECD Framework for the Classification of AI systems", OECD Digital Economy Papers, No. 323, OECD Publishing, Paris, doi: 10.1787/cb6d9eca-en.

[22] OECD (2024), "Explanatory memorandum on the updated OECD definition of an AI system", OECD Artificial Intelligence Papers, No. 8, OECD Publishing, Paris, doi: 10.1787/623da898-en

[23] European Commission, C(2025) 924 final, Approval of the content of the draft Communication from the Commission – Commission Guidelines on the definition of an artificial intelligence system established by Regulation (EU) 2024/1689 (AI Act), 6.2.2025

[24] The European Law Institute (ELI), 2024, “The concept of ‘AI system’ under the new AI Act: Arguing for a Three-Factor Approach”, ISBN: 978-3-9505495-3-9

[25] Claudio Novelli, Philipp Hacker, Jessica Morley, Jarle Trondal, and Luciano Floridi, 2024, A Robust Governance for the AI Act: AI Office, AI Board, Scientific Panel, and National Authorities (May 5, 2024). Available at SSRN: https://ssrn.com/abstract=4817755 or doi: 10.2139/ssrn.4817755

[26] WELMEC Guide 7.6 “Software Risk Assessment for Measuring Instruments” https://www.welmec.org/guides-and-publications/guides/

[27] WELMEC Guide 7.3 “Reference Architectures – Based on WELMEC Guide 7.2” https://www.welmec.org/guides-and-publications/guides/

[28] WELMEC Guide 7.4 “Exemplary Applications of WELMEC Guide 7.2” https://www.welmec.org/guides-and-publications/guides/

[29] WELMEC Guide 7.5 “Software in NAWIs (Non-automatic Weighing Instruments Directive 2014/31/EU)” https://www.welmec.org/guides-and-publications/guides/

[30] Directive 2014/31/EU of the European Parliament and of the Council of 26 February 2014 on the harmonisation of the laws of the Member States relating to the making available on the market of non-automatic weighing instruments, Official Journal of the European Union L 96/107, 29.3.2014

[31] Directive 2014/32/EU of the European Parliament and of the Council of 26 February 2014 on the harmonisation of the laws of the Member States relating to the making available on the market of measuring instruments (recast), Official Journal of the European Union L 96/149, 29.3.2014

[32] M. Esche and F. Thiel, 2015, Software Risk Assessment for Measuring Instruments in Legal Metrology, Federated Conference on Computer Science and Information Systems (FedCSIS) 2015. doi: 10.15439/2015F127

[33] M. Esche and F. Thiel, 2016, Incorporating a Measure for Attacker Motivation into Software Risk Assessment for Measuring Instruments in Legal Metrology, 18. GMA/ITG-Fachtagung Sensoren und Messsysteme. doi: 10.5162/sensoren2016/P7

[34] M. Esche, F. Grasso Toro and F. Thiel, 2017, Representation of Attacker Motivation in Software Risk Assessment Using Attack Probability Trees, Federated Conference on Computer Science and Information Systems (FedCSIS) Source: https://annals-csis.org/Volume_11/drp/pdf/112.pdf

[35] M. Esche, F. Grasso Toro, 2020, Developing defence strategies from attack probability trees in software risk assessment, Proceedings of the 2020 Federated Conference on Computer Science and Information Systems: 21, 527-536. doi: 10.15439/978-83-955416-7-4

[36] M. Esche, F. Salwiczek and F. Grasso Toro, 2020, Formalization of Software Risk Assessment Results in Legal Metrology Based on ISO/IEC 18045 Vulnerability Analysis, Federated Conference on Computer Science and Information Systems: 21, 527-536, ISSN 2300-5963 ACSIS, Vol. 18. doi: 10.15439/2019F84

[37] Mohamed Khalifa, and Mona Albadawy, 2024, AI in diagnostic imaging: Revolutionising accuracy and efficiency, Computer Methods and Programs in Biomedicine Update, Volume 5, 2024, ISSN 2666-9900. doi: 10.1016/j.cmpbup.2024.100146